Documentation available also in PDF format: ArcadeDB-Manual.pdf.

1. Jump to the Hot Topics

Skip the boring parts and check this out:

-

What is Multi-Model?

-

ArcadeDB supports the following models:

-

Graph (compatible with Gremlin and OrientDB SQL)

-

Document (compatible with the MongoDB driver and MongoDB queries)

-

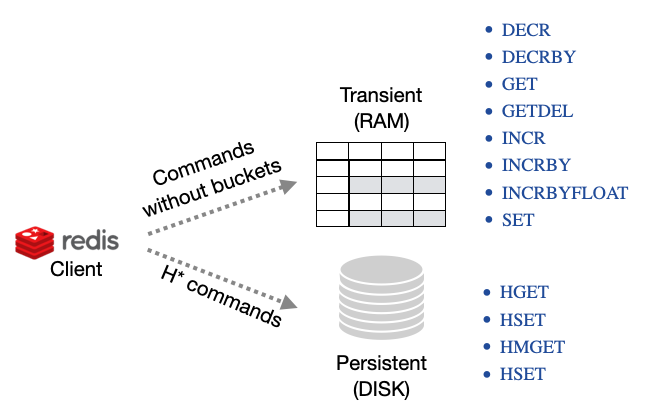

Key/Value (compatible with the Redis driver)

-

Search Engine (LSM-Tree Full-Text Index)

-

Time Series (under construction)

-

Vector (based on the HNSW index)

-

-

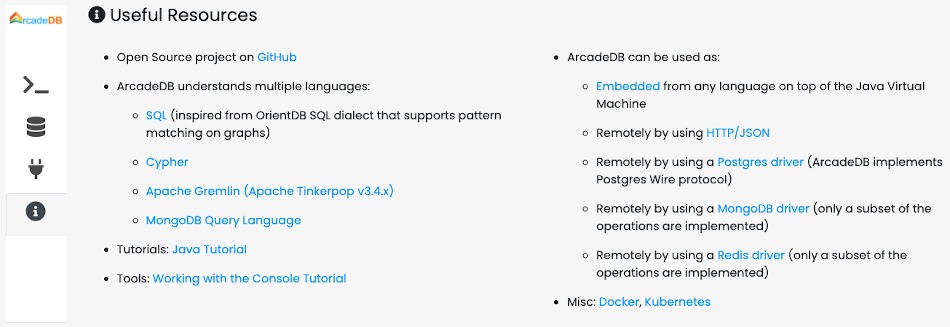

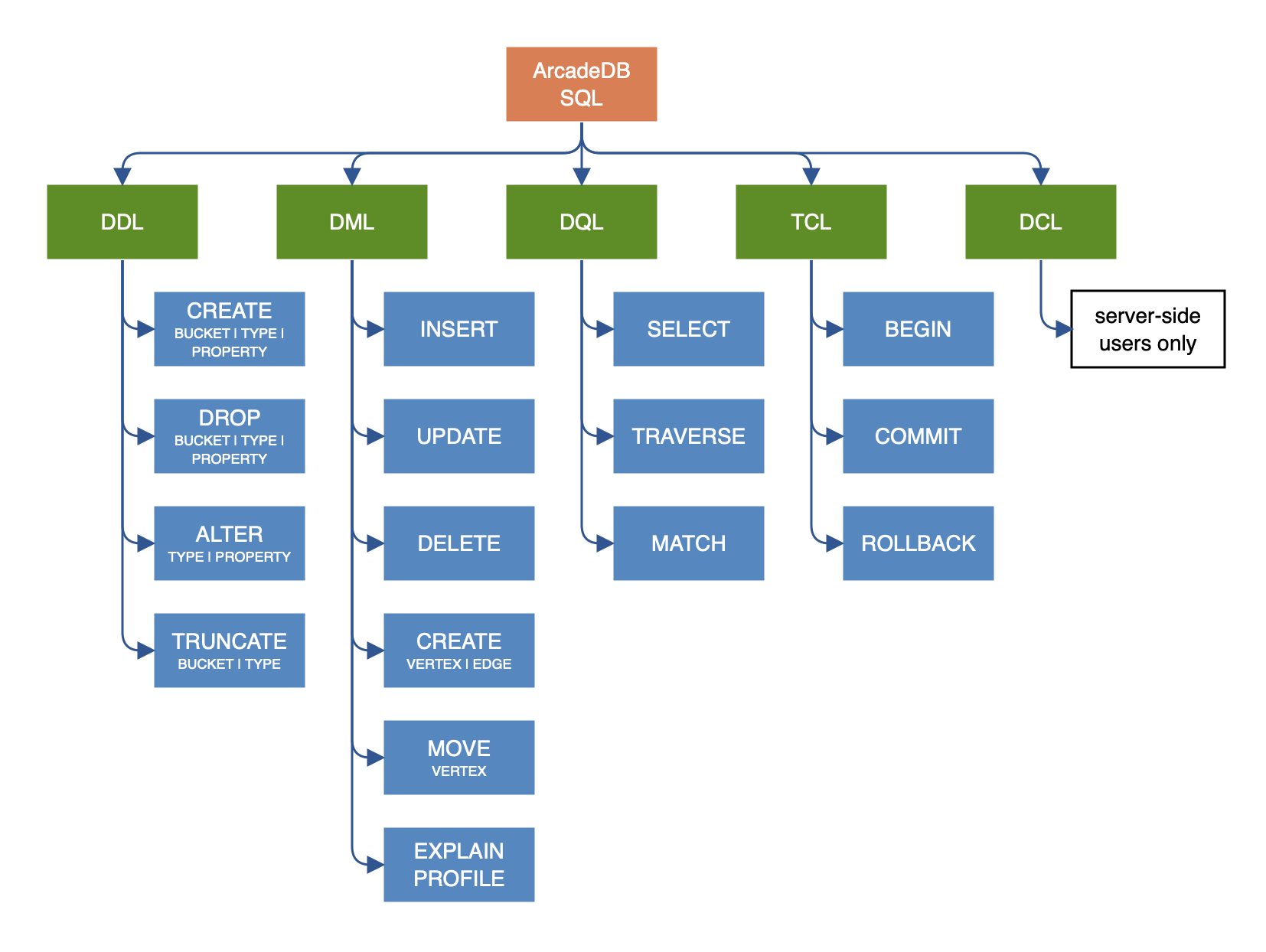

ArcadeDB understands multiple languages, with a SQL dialect as native database query language:

-

SQL (inspired from OrientDB SQL dialect that supports pattern matching on graphs)

-

-

ArcadeDB can be used as:

-

Embedded from any language on top of the Java Virtual Machine

-

Remotely by using HTTP/JSON

-

Remotely by using a Postgres driver (ArcadeDB implements Postgres Wire protocol)

-

Remotely by using a MongoDB driver (only a subset of the operations are implemented)

-

Remotely by using a Redis driver (only a subset of the operations are implemented)

-

-

Getting started with ArcadeDB:

-

Tutorials: Java Tutorial

-

Tools: Working with the Console

-

Containers: Docker, Kubernetes

-

Migrating: from OrientDB

-

-

ArcadeDB Links:

-

Project Website: https://arcadedb.com

-

Source Repository: https://github.com/ArcadeData/arcadedb

-

Latest Release: 25.4.1

-

2. Introduction

2.1. What is ArcadeDB?

ArcadeDB is the new generation of DBMS (DataBase Management System) that runs pretty much on every hardware/software configuration. ArcadeDB is multi-model, which means it can work with graphs, documents as well as other models of data, and doing so extremely fast.

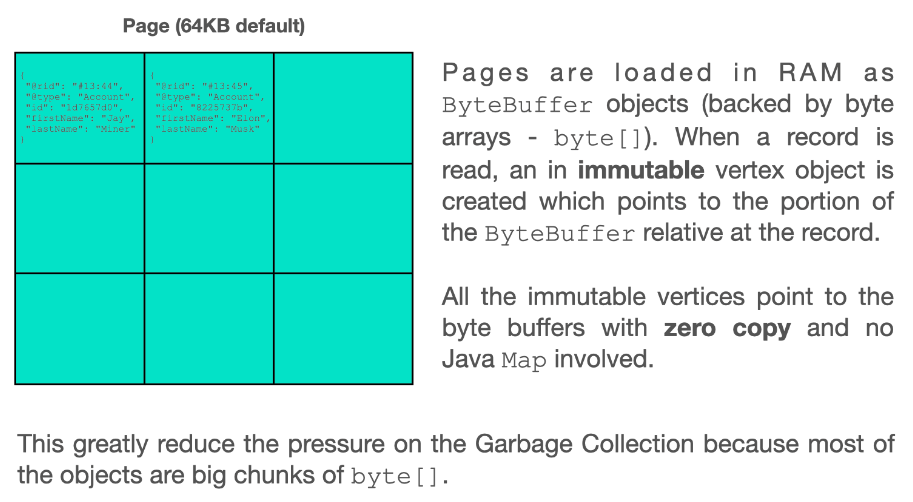

How can it be so fast?

ArcadeDB is written in LLJ ("Low-Level-Java"), that means it’s written in Java (Java8+), but without using a high-level API. The result is that ArcadeDB does not allocate many objects at run-time on the heap, so the garbage collection does not need to act regularly, only rarely. At the same time, it is highly portable and leverages the hyper optimized Java Virtual Machine*. Furthermore, the kernel is built to be efficient on multi-core CPUs by using novel mechanical sympathy techniques.

ArcadeDB is a native graph database:

-

No more "Joins": relationships are physical links to records

-

Traverses parts of, or entire trees and graphs of records in milliseconds

-

Traversing speed is independent from the database size

Cloud DBMS

ArcadeDB was born in the cloud. Even though you can run ArcadeDB as embedded and in an on-premise setup, you can spin an ArcadeDB server/cluster in a few seconds with Docker, Kubernetes, Amazon AWS (coming soon), or Microsoft Azure (coming soon).

Is ArcadeDB FREE?

ArcadeDB Community Edition is really FREE for any purpose and thus released under the Apache 2.0 license. We love to know about your project with ArcadeDB and any contributions back to ArcadeDB’s open community (reports, patches, test cases, documentations, etc) are welcome.

Ask yourself: which is more likely to have better quality? A DBMS created and tested by a handful of developers in isolation, or one tested by thousands of developers globally? When code is public, everyone can scrutinize, test, report and resolve issues. All things open source moves faster compared to the proprietary world.

2.2. Multi Model

The ArcadeDB engine supports Graph, Document, Key/Value, Search-Engine, Time-Series (🚧), and Vector-Embedding (🚧) models, so you can use ArcadeDB as a replacement for a product in any of these categories. However, the main reason why users choose ArcadeDB is because of its true Multi-Model DBMS ability, which combines all the features of the above models into one core. This is not just interfaces to the database engine, but rather the engine itself was built to support all models. This is also the main difference to other multi-model DBMSs, as they implement an additional layer with an API, which mimics additional models. However, under the hood, they’re truly only one model, therefore they are limited in speed and scalability.

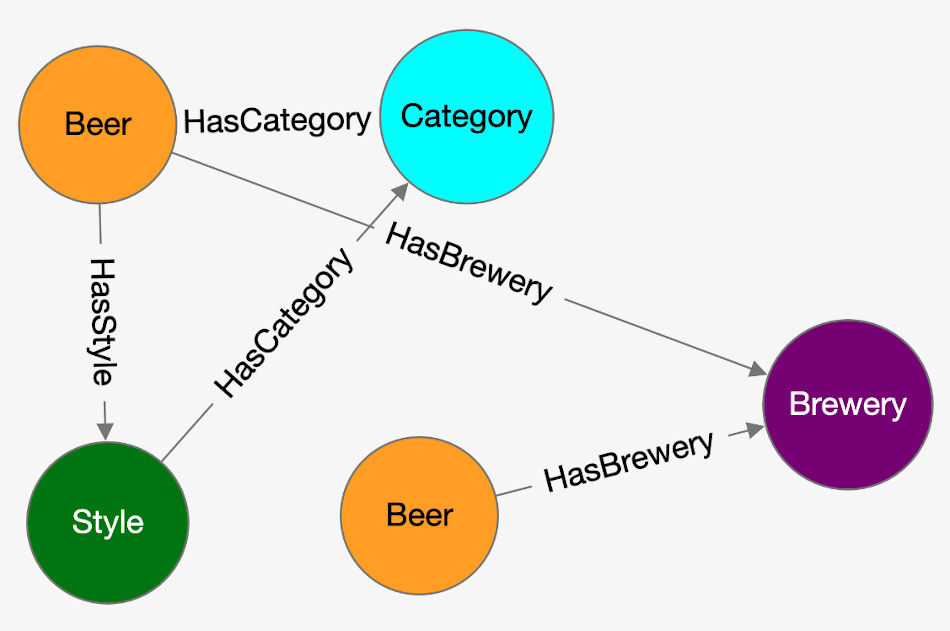

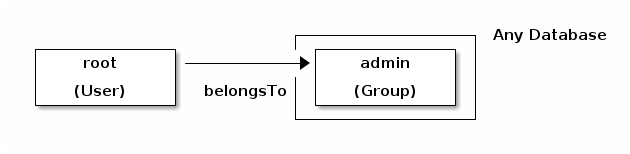

2.2.1. Graph Model

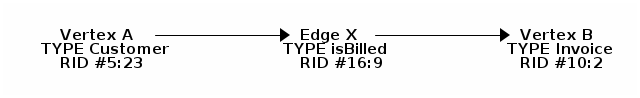

A graph represents a network-like structure consisting of Vertices (also known as Nodes) interconnected by Edges (also known as Arcs). ArcadeDB’s graph model is represented by the concept of a property graph, which defines the following:

-

Vertex - an entity that can be linked with other vertices and has the following mandatory properties:

-

unique identifier

-

set of incoming edges

-

set of outgoing edges

-

label that defines the type of vertex

-

-

Edge - an entity that links two vertices and has the following mandatory properties:

-

unique identifier

-

link to an incoming vertex (also known as head)

-

link to an outgoing vertex (also known as tail)

-

label that defines the type of connection/relationship between head and tail vertex

-

In addition to mandatory properties, each vertex or edge can also hold a set of custom properties. These properties can be defined by users, which can make vertices and edges appear similar to documents. Furthermore, edges are sorted by the reverse order of insertion, meaning the last edge added is the first when listed, cf. "Last In First Out".

In the table below, you can find a comparison between the graph model, the relational data model, and the ArcadeDB graph model:

| Relational Model | Graph Model | ArcadeDB Graph Model |

|---|---|---|

Table |

Vertex and Edge Types |

Type |

Row |

Vertex |

Vertex |

Column |

Vertex and Edge property |

Vertex and Edge property |

Relationship |

Edge |

Edge |

2.2.2. Document Model

The data in this model is stored inside documents. A document is a set of key/value pairs (also referred to as fields or properties), where the key allows access to its value. Values can hold primitive data types, embedded documents, or arrays of other values. Documents are not typically forced to have a schema, which can be advantageous, because they remain flexible and easy to modify. Documents are stored in collections, enabling developers to group data as they decide. ArcadeDB uses the concepts of "Types" and "Buckets" as its form of "collections" for grouping documents. This provides several benefits, which we will discuss in further sections of the documentation.

ArcadeDB’s document model also adds the concept of a "Relationship" between documents. With ArcadeDB, you can decide whether to embed documents or link to them directly. When you fetch a document, all the links are automatically resolved by ArcadeDB. This is a major difference to other document databases, like MongoDB or CouchDB, where the developer must handle any and all relationships between the documents herself.

The table below illustrates the comparison between the relational model, the document model, and the ArcadeDB document model:

| Relational Model | Document Model | ArcadeDB Document Model |

|---|---|---|

Table |

Collection |

|

Row |

Document |

Document |

Column |

Key/value pair |

Document property |

Relationship |

not available |

2.2.3. Key/Value Model

This is the simplest model. Everything in the database can be reached by a key, where the values can be simple and complex types. ArcadeDB supports documents and graph elements as values allowing for a richer model, than what you would normally find in the typical key/value model. The usual Key/Value model provides "buckets" to group key/value pairs in different containers. The most typical use cases of the Key/Value Model are:

-

POST the value as payload of the HTTP call →

/<bucket>/<key> -

GET the value as payload from the HTTP call →

/<bucket>/<key> -

DELETE the value by Key, by calling the HTTP call →

/<bucket>/<key>

The table below illustrates the comparison between the relational model, the Key/Value model, and the ArcadeDB Key/Value model:

| Relational Model | Key/Value Model | ArcadeDB Key/Value Model |

|---|---|---|

Table |

Bucket |

|

Row |

Key/Value pair |

Document |

Column |

not available |

Document field or Vertex/Edge property |

Relationship |

not available |

2.2.4. Search-Engine Model

The search engine model is based on a full-text variant of the LSM-Tree index. To index each word, the necessary tokenization is performed by the Apache Lucene library. Such a full-text index is created just like any index in ArcadeDB.

2.2.6. Vector Model

This model uses the hierarchical navigable small world (HNSW) algorithm to index the multi-dimensional vector data. Practically, an extended version of the hnswlib is used. Since the HNSW algorithm is based on a graph, the vectors are stored as compressed arrays inside ArcadeDB’s vertex type, and the proximities are represented by actual edges.

The vector indexing process is configurable, i.e. the distance function,

the number of nearest neighbors during construction (efConstruction) or search (ef),

as well as others can be set, see Additional Settings.

Java Example

HnswVectorIndexRAM<String, float[], Word, Float> hnswIndex = HnswVectorIndexRAM.newBuilder(300, DistanceFunctions.FLOAT_INNER_PRODUCT, words.size())

.withM(16).withEf(200).withEfConstruction(200).build();persistentIndex = hnswIndex.createPersistentIndex(database)//

.withVertexType("Word").withEdgeType("Proximity").withVectorPropertyName("vector").withIdProperty("name").create();

persistentIndex.save();persistentIndex = (HnswVectorIndex) database.getSchema().getIndexByName("Word[name,vector]");List<SearchResult<Vertex, Float>> approximateResults = persistentIndex.findNeighbors(input, k);SQL Example

import database https://dl.fbaipublicfiles.com/fasttext/vectors-crawl/cc.en.300.vec.gz

with distanceFunction = 'cosine', m = 16, ef = 128, efConstruction = 128;In this case the default vertex type used for storing vectors is Node.

SELECT vectorNeighbors('Node[name,vector]','king',3);Additional Settings

When working with vector indexes, the following parameters can be configured:

-

distanceFunction: The distance function to use for similarity calculation. See the table below. -

m: The maximum number of connections per layer in the graph (default: 16) -

ef: Number of nearest neighbors to return during search (default: 10) -

efConstruction: Number of nearest neighbors to consider during index construction (default: 200) -

randomSeed: Random seed for reproducible construction (default: 42)

2.3. Run ArcadeDB

You can run ArcadeDB in the following ways:

-

In the cloud (coming soon), by running an ArcadeDB instance on Amazon AWS, Microsoft Azure, or Google Cloud Engine.

-

On-premise, on your servers, any OS is good. You can run with Docker, Podman, Kubernetes or bare metal.

-

On x86(-64), arm(64), or any other hardware supporting a JRE (Java* Runtime Environment)

-

Embedded, if you develop with a language that runs on the JVM (Java* Virtual Machine)*

To reach the best performance, use ArcadeDB in embedded mode to reach two million insertions per second on common hardware. If you need to scale up with the queries, run a HA (high availability) configuration with at least three servers, and a load balancer in front. Run ArcadeDB with Kubernetes to have an automatic setup of servers in HA with a load balancer upfront.

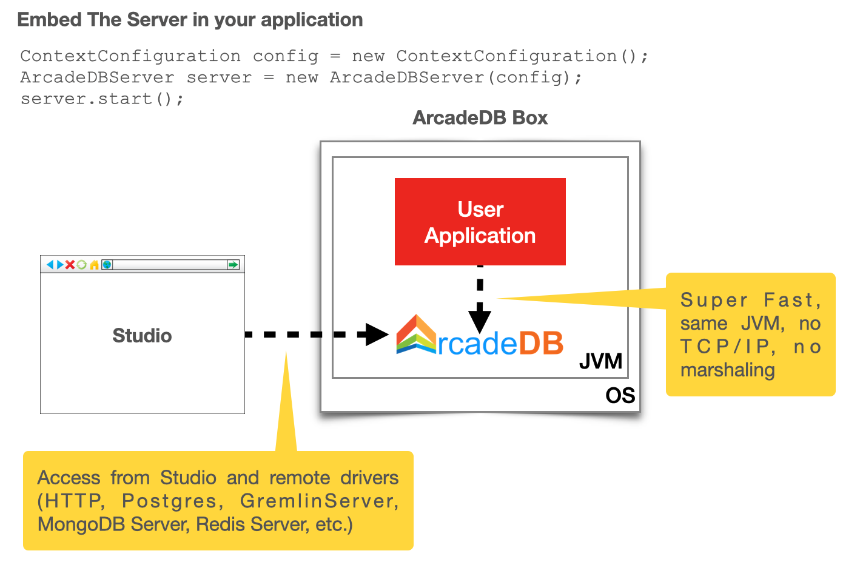

Embedded

This mode is possible only if your application is running in a JVM* (Java* Virtual Machine). In this configuration ArcadeDB runs in the same JVM as your application. In this way you completely avoid the client/server communication cost (TCP/IP, marshalling/unmarshalling, etc.) If the JVM that hosts your application crashes, then also ArcadeDB would crash, but don’t worry, ArcadeDB uses a WAL to recover partially committed transactions. Your data is safe! Check the Embedded Server section.

Client-Server

This is the classic way people use a DBMS, like with relational databases. The ArcadeDB server exposes HTTP/JSON API, so you can connect to ArcadeDB from any language without even using drivers. Take a look at the driver chapter for more information.

High Availability (HA)

You can spin up as many ArcadeDB servers as you want to have a HA setup and scale up with queries that can be executed on any servers. ArcadeDB uses a Raft based election system to guarantee the consistency of the database. For more information look at High Availability.

Binaries

| Linux / Mac | Windows | |

|---|---|---|

|

|

|

|

|

2.3.1. Getting Started

All Platforms

-

Download latest release from Github

-

Unpack

tar -xzf arcadedb-25.4.1-

Change into directory:

cd arcadedb-25.4.1

-

-

Launch server

-

Linux / MacOS:

bin/server.sh -

Windows:

bin\server.bat

-

-

Exit server via CTRL+C

-

Interact with server

-

Studio:

http://localhost:2480 -

Console:

-

Linux / MacOS:

bin/console.sh -

Windows:

bin\console.bat

-

-

Windows via Scoop

Instead of using manual install you can use Scoop installer, instructions are available on their project website.

scoop bucket add extras

scoop install arcadedbThis downloads and installs ArcadeDB on your box and makes following two commands available:

arcadedb-console

arcadedb-serverYou should use these instead of bin\console.bat and bin\server.bat mentioned above.

3. Main Concepts

3.1. Record

A record is the smallest unit you can load from and store in the database. Records come in three types:

-

Document

-

Vertex

-

Edge

Document

Documents are softly typed and are defined by schema types, but you can also use them in a schema-less mode too. Documents handle fields in a flexible manner. You can easily import and export them in JSON format. For example,

{

"name":"Jay",

"surname":"Miner",

"job":"Developer",

"creations":[{

"name":"Amiga 1000",

"company":"Commodore Inc."

},{

"name":"Amiga 500",

"company":"Commodore Inc."

}]

}Vertex

In graph databases the vertices (also: vertexes), or nodes represent the main entity that holds the information. It can be a patient, a company or a product. Vertices are themselves documents with some additional features. This means they can contain embedded records and arbitrary properties exactly like documents. Vertices are connected with other vertices through edges.

Edge

An edge, or arc, is the connection between two vertices. Edges can be unidirectional and bidirectional. One edge can only connect two vertices.

For more information on connecting vertices in general, see Relationships below.

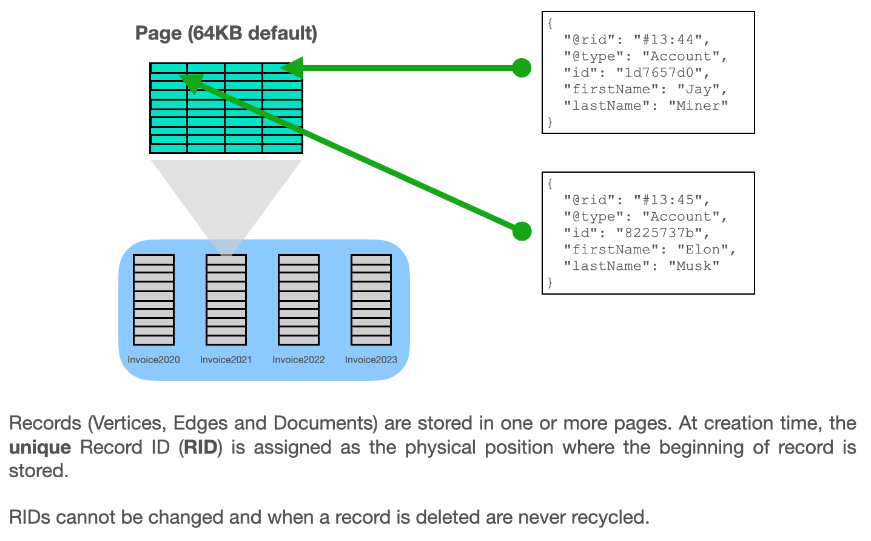

Record ID

When ArcadeDB generates a record, it auto-assigns a unique identifier called a Record ID, RID for short.

The syntax for the RID is the pound symbol (#) with the bucket identifier, colon (:), and the position like so:

#<bucket-identifier>:<record-position>.

-

bucket-identifier: This number indicates the bucket id to which the record belongs. Positive numbers in the bucket identifier indicate persistent records. You can have up to 2,147,483,648 buckets in a database.

-

record-position: This number defines the absolute position of the record in the bucket.

A special case is #-1:-1 symbolizing the null RID.

The prefix character # is mandatory.

|

Each Record ID is immutable, universal, and is never reused. Additionally, records can be accessed directly through their RIDs at O(1) complexity which means the query speed is constant, unaffected by database size. For this reason, you don’t need to create a field to serve as the primary key as you do in relational databases.

Record Retrieval Complexity

Retrieving a record by RID is of complexity O(1).

This is possible as the RID itself encodes both, the file a record is stored in, and the position inside it.

In an RID, i.e. #12:1000000, the bucket identifier (here #12) specifies the record’s associated file,

while the record position (here 1000000) describes the position inside the file.

Bucket files are organized in pages (with default size 64KB) with a maximum number records per page (by default 2048).

To determine the byte position of a record in a bucket file,

the rounded down quotient of record position and maximum records per page yields the page (here ⌊1000000 / 2048⌋),

and the remainder gives the position on the page (here ⌊1000000 % 2048⌋).

In pseudo-code this computation is given by:

int pageId = floor(rid.getPosition() / maxRecordsInPage);

int positionInPage = floor(rid.getPosition() % maxRecordsInPage);3.2. Types

The concept of type is taken from the Object Oriented Programming paradigm, sometimes known as "Class". In ArcadeDB, types define records. It is closest to the concept of a "Table" in relational databases and a "Class" in an object database.

Types can be schema-less, schema-full, or a mix. They can inherit from other types, creating a tree of types. Inheritance, in this context, means that a subtype extends a parent type, inheriting all of its properties and attributes. Practically, this is done by extending a type or setting a super-type.

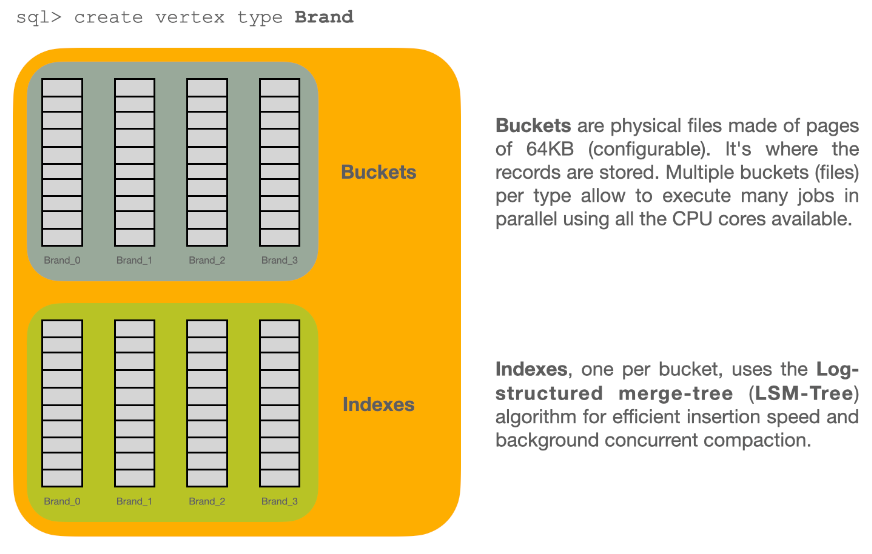

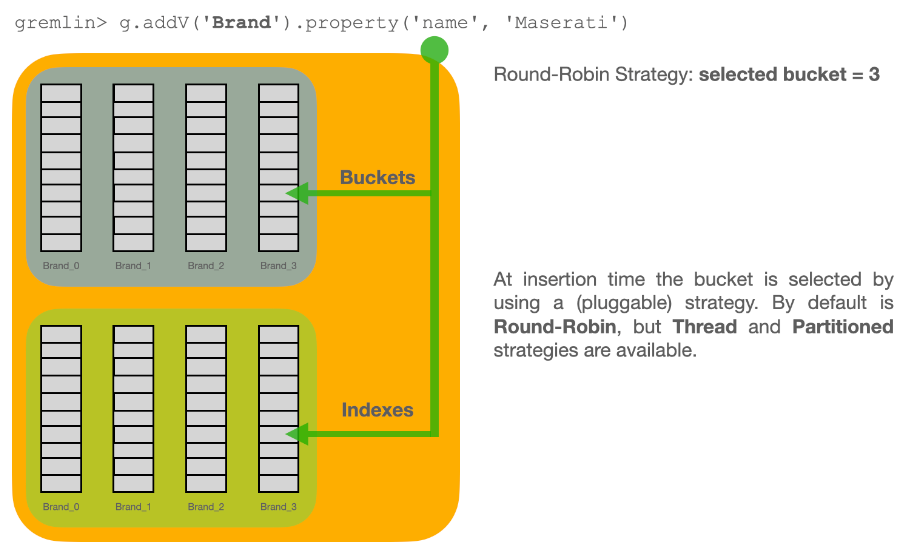

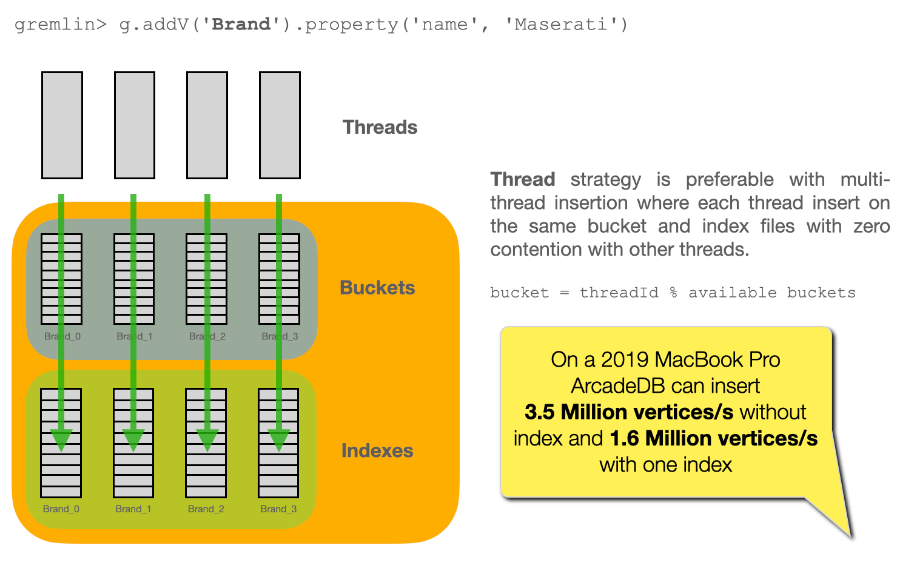

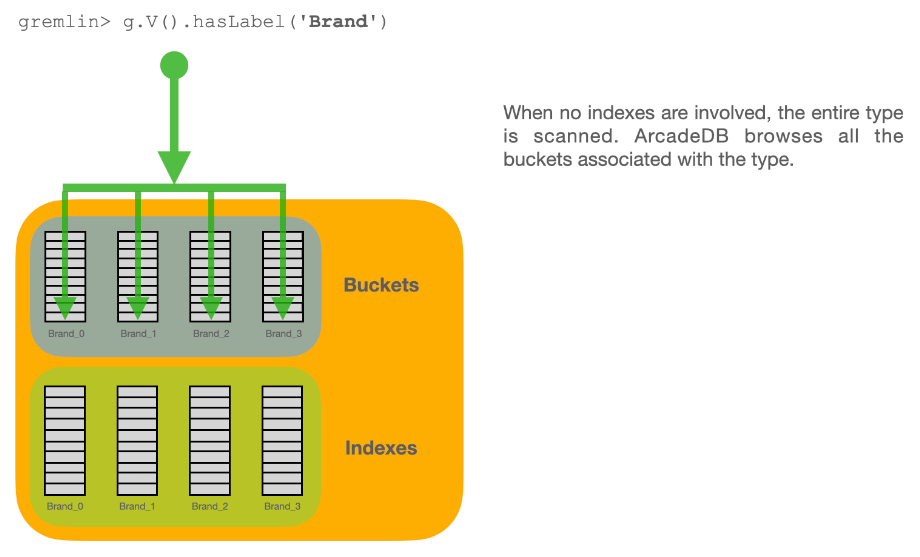

Each type has its own buckets (data files). A type can support multiple buckets. When you execute a query against a type, it automatically fetches from all the buckets that are part of the type. When you create a new record, ArcadeDB selects the bucket to store it in using a configurable strategy.

As a default, ArcadeDB creates one bucket per type, but can be configured to for example to as many cores (processors) the host machine has, see typeDefaultBuckets.

In this, CRUD operations can go full speed in parallel with zero contention between CPUs and/or cores.

Having many buckets per type means having more files at file system level.

Check if your operating system has any limitation with the number of files supported and opened at the same time (ulimit for Unix-like systems).

You can query the defined types by executing the following SQL query: select from schema:types.

|

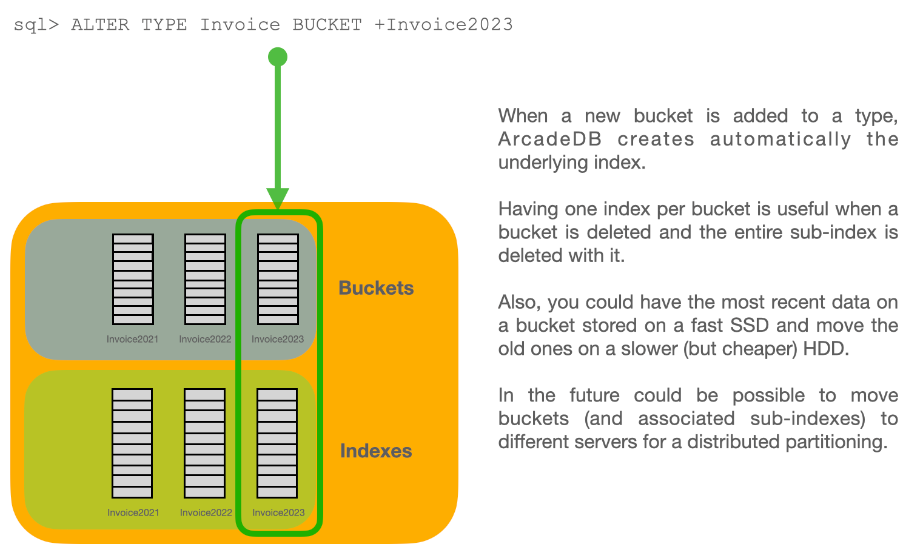

3.3. Buckets

Where types provide you with a logical framework for organizing data, buckets provide physical or in-memory space in which ArcadeDB actually stores the data. Each bucket is one file at file system level. It is comparable to the "collection" in Document databases, the "table" in Relational databases and the "cluster" in OrientDB. You can have up to 2,147,483,648 buckets in a database.

A bucket can only be part of one type. This means two types can not share the same bucket. Also, sub-types have their separate buckets from their super-types.

When you create a new type, the CREATE TYPE statement automatically creates the physical buckets (files) that serve as the default location in which to store data for that type.

ArcadeDB forms the bucket names by using the type name + underscore + a sequential number starting from 0. For example, the first bucket for the type Beer will be Beer_0 and the correspondent file in the file system will be Beer_0.31.65536.bucket.

By default ArcadeDB creates one bucket per type.

For massive inserts, performance can be improved by creating additional buckets and hence taking advantage of parallelism, i.e. by creating one bucket for each CPU core on the server.

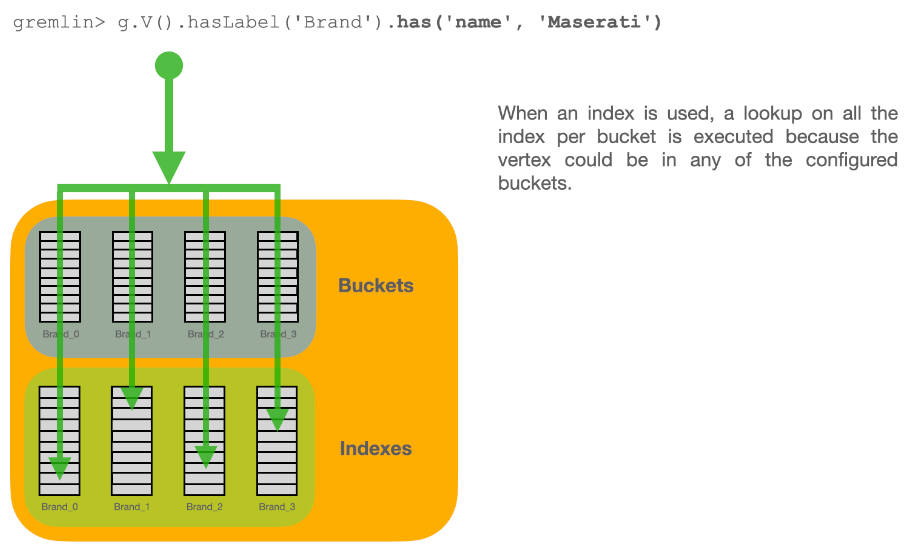

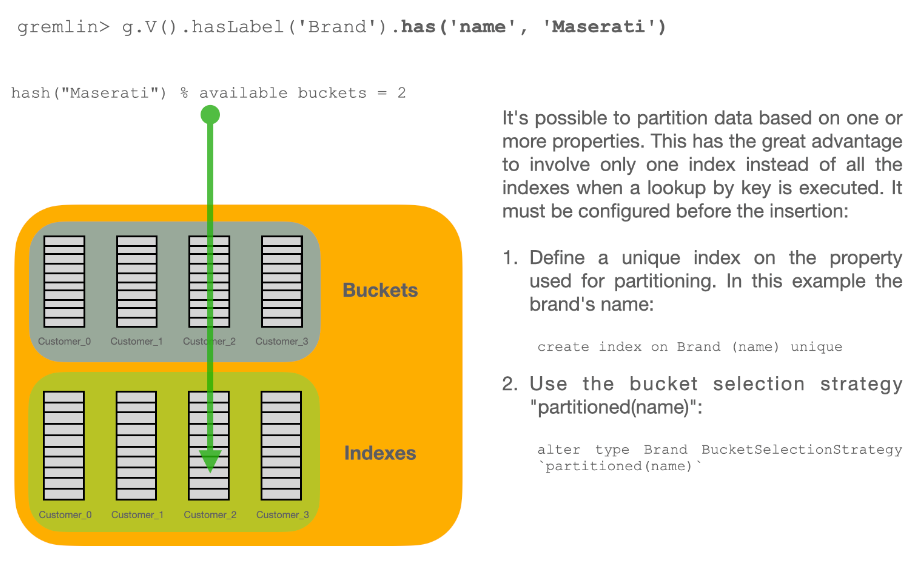

Types vs. Buckets in Queries

The combination of types and buckets is very powerful and has a number of use cases. In most case, you can work with types and you will be fine. But if you are able to split your database into multiple buckets, you could address a specific bucket based instead of the entire type. By wisely using the buckets to divide your database in a way that help you with the retrieval means zero or less use of indexes. Indexes slow down insertion and take space on disk and RAM. In most cases you need indexes to speed up your queries, but in some use cases you could totally or partially avoid using indexes and still having good performance on queries.

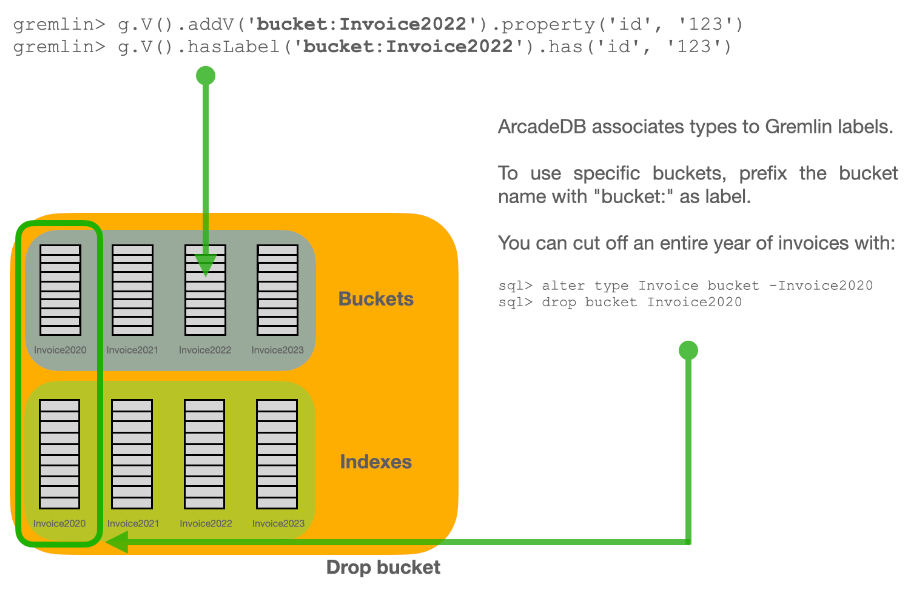

One bucket per period

Consider an example where you create a type Invoice, with one bucket per year. Invoice_2015 and Invoice_2016.

You can query all invoices using the type as a target with the SELECT statement.

SELECT FROM InvoiceIn addition to this, you can filter the result set by the year.

The type Invoice includes a year field, you can filter it through the WHERE clause.

SELECT FROM Invoice WHERE year = 2016You can also query specific records from a single bucket.

By splitting the type Invoice across multiple buckets, (that is, one per year in our example), you can optimize the query by narrowing the potential result set.

SELECT FROM BUCKET:Invoice_2016By using the explicit bucket instead of the logical type, this query runs significantly faster, because ArcadeDB can narrow the search to the targeted bucket.

No index is needed on the year, because all the invoices for year 2016 will be stored in the bucket Invoice_2016 by the application.

One bucket per location

Like with the example above, we could split our records by location creating one bucket per location. Example:

CREATE BUCKET Customer_Europe

CREATE BUCKET Customer_Americas

CREATE BUCKET Customer_Asia

CREATE BUCKET Customer_Other

CREATE VERTEX TYPE Customer BUCKET Customer_Europe,Customer_Americas,Customer_Asia,Customer_OtherHere we are using the graph model by creating a vertex type, but it’s the same with documents.

Use CREATE DOCUMENT TYPE instead.

Now in your application, store the vertices or documents in the right bucket, based on the location of such customer. You can use any API and set the bucket. If you’re using SQL, this is the way you can insert a new customer into a specific bucket.

INSERT INTO BUCKET:Customer_Europe CONTENT { firstName: 'Enzo', lastName: 'Ferrari' }Since a bucket can only be part of one type, when you use the bucket notation with SQL, the type is inferred from the bucket, "Customer" in this case.

When you’re looking for customers based in Europe, you could execute this query:

SELECT FROM BUCKET:Customer_EuropeYou can go even more specific by creating a bucket per country, not just for continent, and query from that bucket. Example:

CREATE BUCKET 'Customer_Europe_Italy'

CREATE BUCKET 'Customer_Europe_Spain'Now get all the customers that live in Italy:

SELECT FROM BUCKET:Customer_Europe_ItalyYou can also specify a list of buckets in your query. This is the query to retrieve both Italian and Spanish customers.

SELECT FROM BUCKET:[Customer_Europe_Italy,Customer_Europe_Spain]3.4. Relationships

ArcadeDB supports three kinds of relationships: connections, referenced and embedded. It can manage relationships in a schema-full or schema-less scenario.

Graph Connections

As a graph database, spanning edges between vertices is one way to express a connections between records. This is the graph model’s natural way of relationsships and traversable by the SQL, Gremlin, and Cypher query languages. Internally, ArcadeDB deposes a direct (referenced) relationship for edge-wise connected vertices to ensure fast graph traversals.

Example

In ArcadeDB’s SQL, edges are created via the CREATE EDGE command.

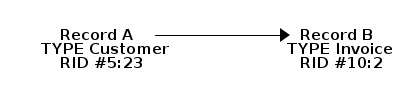

Referenced Relationships

In Relational databases, tables are linked through JOIN commands, which can prove costly on computing resources.

ArcadeDB manages relationships natively without computing a JOIN but storing a direct LINK to the target object of the relationship. This boosts the load speed for the entire graph of connected objects, such as in graph and object database systems.

Example

Note, that referenced relationships differ from edges: references are properties connecting any record while edges are types connecting vertices, and particularly, graph traversal is only applicable to edges.

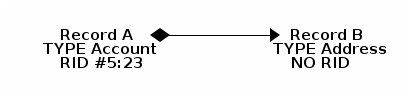

Embedded Relationships

When using Embedded relationships, ArcadeDB stores the relationship within the record that embeds it. These relationships are stronger than Reference relationships. You can represent it as a UML Composition relationship.

Embedded records do not have their own RID, so it can’t be referenced through other records. It is only accessible directly through the container record. Furthermore, an embedded record is stored inside the embedding record, and not in an embedded record type’s bucket. Hence, in the event that you delete the container record, the embedded record is also deleted. For example,

Here, record A contains the entirety of record B in the property address.

You can reach record B only by traversing the container record.

For example,

SELECT FROM Account WHERE address.city = 'Rome'1:1 and n:1 Embedded Relationships

ArcadeDB expresses relationships of these kinds using the EMBEDDED type.

1:n and n:n Embedded Relationships

ArcadeDB expresses relationships of these kinds using a list or a map of links, such as:

-

LISTAn ordered list of records. -

MAPAn ordered map of records as the value and a string as the key, it doesn’t accept duplicate keys.

Inverse Relationships

In ArcadeDB, all edges in the graph model are bidirectional. This differs from the document model, where relationships are always unidirectional, requiring the developer to maintain data integrity. In addition, ArcadeDB automatically maintains the consistency of all bidirectional relationships.

Edge Constraints

ArcadeDB supports edge constraints, which means limiting the admissible vertex types that can be connected by an edge type.

To this end the implicit metadata properties @in and @out need to be made explicit by creating them.

For example, for an edge type HasParts that is supposed to connect only from vertices of type Product to vertices of type Component, this can be schemed by:

CREATE EDGE TYPE HasParts;

CREATE PROPERTY HasParts.`@out` link OF Product;

CREATE PROPERTY HasParts.`@in` link OF Component;Relationship Traversal Complexity

As a native graph database, ArcadeDB supports index free adjacency. This means constant graph traversal complexity of O(1), independent of the graph expanse (database size).

To traverse a graph structure, one needs to follow references stored by the current record. These references are always stored as RIDs, and are not only pointers to incoming and outgoing edges, but also to connected vertices. Internally, references are managed by a stack (also known as LIFO), which allows to get the latest insertion first. As not only edges, but also connected vertices are stored, neighboring nodes can be reached directly, particularly without going via the connecting edge. This is useful if edges are used purely to connect vertices and do not carry i.e. properties themselves.

3.5. Database

Each server or Java VM can handle multiple database instances, but the database name must be unique.

Database URL

ArcadeDB uses its own URL format, of engine and database name as <engine>:<db-name>.

The embedded engine is the default and can be omitted.

To open a database on the local file system you can use directly the path as URL.

Database Usage

You must always close the database once you finish working on it.

ArcadeDB automatically closes all opened databases, when the process dies gracefully (not by killing it by force).

This is assured if the operating system allows a graceful shutdown.

For example, on Unix/Linux systems using SIGTERM, or in Docker exit code 143 instead of SIGKILL, or in Docker exit code 137.

|

3.6. Transactions

A transaction comprises a unit of work performed within a database management system (or similar system) against a database, and treated in a coherent and reliable way independent of other transactions. Transactions in a database environment have two main purposes:

-

to provide reliable units of work that allow correct recovery from failures and keep a database consistent even in cases of system failure, when execution stops (completely or partially) and many operations upon a database remain uncompleted, with unclear status

-

to provide isolation between programs accessing a database concurrently. If this isolation is not provided, the program’s outcome are possibly erroneous.

A database transaction, by definition, must be atomic, consistent, isolated and durable. Database practitioners often refer to these properties of database transactions using the acronym ACID). - Wikipedia

ArcadeDB is an ACID compliant DBMS.

| ArcadeDB keeps the transaction in the host’s RAM, so the transaction size is affected by the available RAM (Heap memory) on JVM. For transactions involving many records, consider to split it in multiple transactions. |

ACID Properties

Atomicity

"Atomicity requires that each transaction is 'all or nothing': if one part of the transaction fails, the entire transaction fails, and the database state is left unchanged. An atomic system must guarantee atomicity in each and every situation, including power failures, errors, and crashes. To the outside world, a committed transaction appears (by its effects on the database) to be indivisible ("atomic"), and an aborted transaction does not happen." - Wikipedia

Consistency

"The consistency property ensures that any transaction will bring the database from one valid state to another. Any data written to the database must be valid according to all defined rules, including but not limited to constraints, cascades, triggers, and any combination thereof. This does not guarantee correctness of the transaction in all ways the application programmer might have wanted (that is the responsibility of application-level code) but merely that any programming errors do not violate any defined rules." - Wikipedia

ArcadeDB uses the MVCC to assure consistency by versioning the page where the record are stored.

Look at this example:

| Sequence | Client/Thread 1 | Client/Thread 2 | Version of page containing record X |

|---|---|---|---|

1 |

Begin of Transaction |

||

2 |

read(x) |

10 |

|

3 |

Begin of Transaction |

||

4 |

read(x) |

10 |

|

5 |

write(x) |

10 |

|

6 |

commit |

10 → 11 |

|

7 |

write(x) |

10 |

|

8 |

commit |

10 → 11 = Error, in database x already is at 11 |

Isolation

"The isolation property ensures that the concurrent execution of transactions results in a system state that would be obtained if transactions were executed serially, i.e. one after the other. Providing isolation is the main goal of concurrency control. Depending on concurrency control method, the effects of an incomplete transaction might not even be visible to another transaction." - Wikipedia

The SQL standard defines the following phenomena which are prohibited at various levels are:

-

Dirty Read: a transaction reads data written by a concurrent uncommitted transaction. This is never possible with ArcadeDB.

-

Non Repeatable Read: a transaction re-reads data it has previously read and finds that data has been modified by another transaction (that committed since the initial read).

-

Phantom Read: a transaction re-executes a query returning a set of rows that satisfy a search condition and finds that the set of rows satisfying the condition has changed due to another recently-committed transaction. This happens also when records are deleted or inserted during the transaction and they could become visible during the transaction.

The SQL standard transaction isolation levels are described in the table below:

| Isolation Level | Dirty Read | Non repeatable Read | Phantom Read |

|---|---|---|---|

|

Not possible |

Possible |

Possible |

|

Not possible |

Not possible |

Possible |

The SQL SERIALIZABLE level is not supported by ArcadeDB.

Using remote access all the commands are executed on the server, so out of transaction scope.

Look below for more information.

Look at these examples:

| Sequence | Client/Thread 1 | Client/Thread 2 |

|---|---|---|

1 |

Begin of Transaction |

|

2 |

read(x) |

|

3 |

Begin of Transaction |

|

4 |

read(x) |

|

5 |

write(x) |

|

6 |

commit |

|

7 |

read(x) |

|

8 |

commit |

At operation 7 the client 1 continues to read the same version of x read in operation 2.

| Sequence | Client/Thread 1 | Client/Thread 2 |

|---|---|---|

1 |

Begin of Transaction |

|

2 |

read(x) |

|

3 |

Begin of Transaction |

|

4 |

read(y) |

|

5 |

write(y) |

|

6 |

commit |

|

7 |

read(y) |

|

8 |

commit |

At operation 7 the client 1 reads the version of y which was written at operation 6 by client 2. This is because it never reads y before.

Durability

"Durability means that once a transaction has been committed, it will remain so, even in the event of power loss, crashes, or errors. In a relational database, for instance, once a group of SQL statements execute, the results need to be stored permanently (even if the database crashes immediately thereafter). To defend against power loss, transactions (or their effects) must be recorded in a non-volatile memory." - Wikipedia

Fail-over

An ArcadeDB instance can fail for several reasons:

-

HW problems, such as loss of power or disk error

-

SW problems, such as a operating system crash

-

Application problems, such as a bug that crashes your application that is connected to the ArcadeDB engine.

You can use the ArcadeDB engine directly in the same process of your application. This gives superior performance due to the lack of inter-process communication. In this case, should your application crash (for any reason), the ArcadeDB engine also crashes.

If you’re connected to an ArcadeDB server remotely, and if your application crashes but the engine continues to work, any pending transaction owned by the client will be rolled back.

Auto-recovery

At start-up the ArcadeDB engine checks to if it is restarting from a crash. In this case, the auto-recovery phase starts which rolls back all pending transactions.

ArcadeDB has different levels of durability based on storage type, configuration and settings.

Optimistic Transaction

This mode uses the well known Multi Version Control System MVCC by allowing multiple reads and writes on the same records.

The integrity check is made on commit.

If the record has been saved by another transaction in the interim, then an ConcurrentModificationException will be thrown.

The application can choose either to repeat the transaction or abort it.

| ArcadeDB keeps the whole transaction in the host’s RAM, so the transaction size is affected by the available RAM (Heap) memory on JVM. For transactions involving many records, consider to split it in multiple transactions. |

Nested transactions and propagation

ArcadeDB does support nested transaction.

If a begin() is called after a transaction is already begun, then the new transaction is the current one until commit or rollback.

When this nested transaction is completed, the previous transaction becomes the current transaction again.

3.7. Inheritance

Unlike many object-relational mapping tools, ArcadeDB does not split documents between different types. Each document resides in one or a number of buckets associated with its specific type. When you execute a query against a type that has subtypes, ArcadeDB searches the buckets of the target type and all subtypes.

Declaring Inheritance in Schema

In developing your application, bear in mind that ArcadeDB needs to know the type inheritance relationship.

For example,

DocumentType account = database.getSchema().createDocumentType("Account");

DocumentType company = database.getSchema().createDocumentType("Company").addSuperType(account);Using Polymorphic Queries

By default, ArcadeDB treats all queries as polymorphic. Using the example above, you can run the following query from the console:

SELECT FROM Account WHERE name.toUpperCase() = 'GOOGLE'This query returns all instances of the types Account and Company that have a property name that matches Google.

How Inheritance Works

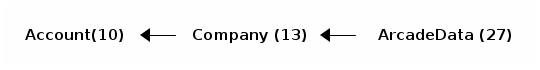

Consider an example, where you have three types, listed here with the bucket identifier in the parentheses.

By default, ArcadeDB creates a separate bucket for each type.

It indicates this bucket by the defaultBucketId property in a type and indicates the bucket used by default when not specified.

However, a type has a property bucketIds, (as int[]), that contains all the buckets able to contain the records of that type. The properties bucketIds and defaultBucketId are the same by default.

When you execute a query against a type, ArcadeDB limits the result-sets to only the records of the buckets contained in the bucketIds property.

For example,

SELECT FROM Account WHERE name.toUpperCase() = 'GOOGLE'This query returns all the records with the name property set to GOOGLE from all three types, given that the base type Account was specified.

For the type Account, ArcadeDB searches inside the buckets 10, 13 and 27, following the inheritance specified in the schema.

3.8. Schema

ArcadeDB supports schema-less, schema-full and hybrid operation. This means for all types that have a declared schema it is applied. Minimally, a type (document, vertex or edge) needs to be declared in a database to be able to insert to, i.e. CREATE TYPE (SQL) and ALTER TYPE (SQL). Beyond the type, declaration properties with or without constraints, can be declared, i.e. CREATE PROPERTY (SQL) and ALTER PROPERTY (SQL). Inserting into a declared type, all datasets are accepted (even with additional undeclared properties) as long as no property constraints are violated.

3.9. Indexes

ArcadeDB indexes are built by using the LSM Tree algorithm.

3.9.1. LSM Tree algorithm

LSM tree is a type of data structure that is used to store and retrieve data efficiently. It works by organizing data in a tree-like structure, where each node in the tree represents a certain range of data.

Here’s how it works:

-

When you want to store a piece of data in the LSM tree, it first goes into a special part of the tree called a "write buffer." The write buffer is like a temporary storage area where new data is kept until it’s ready to be added to the tree.

-

When the write buffer gets full, the LSM tree will "flush" the data from the write buffer into the main part of the tree. This is done by creating a new node in the tree and adding the data from the write buffer to it.

-

As more and more data is added to the tree, it will eventually become too large to be stored in memory (this is known as "overflowing"). When this happens, the LSM tree will start to "compact" the data by moving some of it to disk storage. This allows the tree to continue growing without running out of memory.

-

When you want to retrieve a piece of data from the LSM tree, the algorithm will search for it in the write buffer, the main part of the tree, and any data that has been compacted to disk storage. If the data is found, it will be returned to you

3.9.2. LSM Tree vs B+Tree

B+Tree is the most common algorithm used by relational DBMSs. What are the differences?

-

LSM tree and B+ tree are both data structures that are commonly used to store and retrieve data efficiently. Here are some of the main advantages of LSM tree over B+ tree:

-

LSM tree is more efficient for writes: LSM tree uses a write buffer to temporarily store new data, which allows it to batch writes and reduce the number of disk accesses required. This can make it faster than B+ tree for inserting large amounts of data.

-

LSM tree is more efficient for compaction: Because LSM tree stores data in a sorted fashion, it can compact data more efficiently by simply merging sorted data sets. B+ tree, on the other hand, requires more complex rebalancing operations when compacting data.

-

LSM tree is more space-efficient: LSM tree stores data in a compact, sorted format, which can make it more space-efficient than B+ tree. This can be especially useful when storing large amounts of data on disk.

-

However, there are also some potential disadvantages of LSM tree compared to B+ tree. For example, B+ tree may be faster for queries that require range scans or random access, and it may be easier to implement in some cases.

If you’re interested to ArcadeDB’s LSM-Tree index implementation detail, look at LSM-Tree

4. Server

4.1. Server

To start ArcadeDB as a server run the script server.sh under the bin directory of ArcadeDB distribution. If you’re using MS Windows OS, replace server.sh with server.bat.

$ bin/server.sh

█████╗ ██████╗ ██████╗ █████╗ ██████╗ ███████╗██████╗ ██████╗

██╔══██╗██╔══██╗██╔════╝██╔══██╗██╔══██╗██╔════╝██╔══██╗██╔══██╗

███████║██████╔╝██║ ███████║██║ ██║█████╗ ██║ ██║██████╔╝

██╔══██║██╔══██╗██║ ██╔══██║██║ ██║██╔══╝ ██║ ██║██╔══██╗

██║ ██║██║ ██║╚██████╗██║ ██║██████╔╝███████╗██████╔╝██████╔╝

╚═╝ ╚═╝╚═╝ ╚═╝ ╚═════╝╚═╝ ╚═╝╚═════╝ ╚══════╝╚═════╝ ╚═════╝

PLAY WITH DATA arcadedb.com

2025-05-02 21:29:07.772 INFO [ArcadeDBServer] <ArcadeDB_0> ArcadeDB Server v25.4.1 is starting up...

2025-05-02 21:29:07.776 INFO [ArcadeDBServer] <ArcadeDB_0> Running on Mac OS X 15.4.1 - OpenJDK 64-Bit Server VM 21.0.7 (Homebrew)

2025-05-02 21:29:07.778 INFO [ArcadeDBServer] <ArcadeDB_0> Starting ArcadeDB Server in development mode with plugins [] ...

2025-05-02 21:29:07.814 INFO [ArcadeDBServer] <ArcadeDB_0> - Metrics Collection Started...

+--------------------------------------------------------------------+

| WARNING: FIRST RUN CONFIGURATION |

+--------------------------------------------------------------------+

| This is the first time the server is running. Please type a |

| password of your choice for the 'root' user or leave it blank |

| to auto-generate it. |

| |

| To avoid this message set the environment variable or JVM |

| setting `arcadedb.server.rootPassword` to the root password to use.|

+--------------------------------------------------------------------+

Root password [BLANK=auto generate it]: *The first time the server is running, the root password must be inserted and confirmed.

The hash (+salt) of the inserted password will be stored in the file config/server-users.json.

The password length must be between 8 and 256 characters.

To know more about this topic, look at Security.

Delete this file and restart the server to reinsert the password for server’s root user.

The default rules of security are pretty basic. You can implement your own security policy. Check the Security Policy.

You can skip the request for the password by passing it as a setting. Example:

-Darcadedb.server.rootPassword=this_is_a_passwordAlternatively the password can be passed file-based. Example:

-Darcadedb.server.rootPasswordPath=/run/secrets/rootwhich is particularly useful for container-based deployments.

| The password file is a plain-text file and should not contain any line breaks / new lines. |

Once inserted the password for the root user, you should see this output.

Root password [BLANK=auto generate it]: *********

*Please type the root password for confirmation (copy and paste will not work): *********

2025-05-02 21:29:56.571 INFO [HttpServer] <ArcadeDB_0> - Starting HTTP Server (host=0.0.0.0 port=[I@2b48a640 httpsPort=[I@1e683a3e)...

2025-05-02 21:29:56.593 INFO [undertow] starting server: Undertow - 2.3.18.Final

2025-05-02 21:29:56.596 INFO [xnio] XNIO version 3.8.16.Final

2025-05-02 21:29:56.599 INFO [nio] XNIO NIO Implementation Version 3.8.16.Final

2025-05-02 21:29:56.611 INFO [threads] JBoss Threads version 3.5.0.Final

2025-05-02 21:29:56.654 INFO [HttpServer] <ArcadeDB_0> - HTTP Server started (host=0.0.0.0 port=2480 httpsPort=2490)

2025-05-02 21:29:56.770 INFO [ArcadeDBServer] <ArcadeDB_0> Available query languages: [sqlscript, mongo, gremlin, java, cypher, js, graphql, sql]

2025-05-02 21:29:56.771 INFO [ArcadeDBServer] <ArcadeDB_0> ArcadeDB Server started in 'development' mode (CPUs=8 MAXRAM=4,00GB)

2025-05-02 21:29:56.772 INFO [ArcadeDBServer] <ArcadeDB_0> Studio web tool available at http://192.168.1.108:2480By default, the following components start with the server:

-

JMX Metrics, to monitor server performance and statistics (served via port 9999).

-

HTTP Server, that listens on port 2480 by default. If port 2480 is already occupied, then the next is taken up to 2489.

In the output above, the name ArcadeDB_0 is the server name.

By default, ArcadeDB_0 is used.

To specify a different name define it with the setting server.name, example:

$ bin/server.sh -Darcadedb.server.name=ArcadeDB_Europe_0In a high availability (HA) configuration, it’s mandatory that all the servers in an cluster have different names.

4.1.1. Start server hint

To start the server from a location different than the ArcadeDB folder,

for example, if starting the server as a service,

set the environment variable ARCADEDB_HOME to the ArcadeDB folder:

$ export ARCADEDB_HOME=/path/to/arcadedb4.1.2. Server modes

The server can be started in one of three modes, which affect the studio and logging:

| Mode | Studio | Logging |

|---|---|---|

|

Yes |

Detailed |

|

Yes |

Brief |

|

No |

Brief |

The mode is controlled by the server.mode setting with a default mode development.

4.1.3. Create default database(s)

Instead of starting a server and then connect to it, to create the default databases, ArcadeDB Server takes an initial default databases list by using the setting server.defaultDatabases.

$ bin/server.sh "-Darcadedb.server.defaultDatabases=Universe[albert:einstein]"With the example above the database "Universe" will be created if doesn’t exist, with user "albert", password "einstein".

Due to the use of [], the command line argument needs to be wrapped in quotes.

|

A default database without users still needs to include empty brackets, ie: -Darcadedb.server.defaultDatabases=Multiverse[]

|

Once the server is started, multiple clients can be connected to the server by using one of the supported protocols:

4.1.4. Logging

The log files are created in the folder ./log with the filenames arcadedb.log.X,

where X is a number between 0 to 9, set up for log rotate.

The current log file has the number 0, and is rotated based on server starts or file size.

By default ArcadeDB does not log debug messages into the console and file. You can change this settings by editing the file config/arcadedb-log.properties. The file is a standard logging configuration file.

The default configuration is the following.

1 handlers = java.util.logging.ConsoleHandler, java.util.logging.FileHandler

2 .level = INFO

3 com.arcadedb.level = INFO

4 java.util.logging.ConsoleHandler.level = INFO

5 java.util.logging.ConsoleHandler.formatter = com.arcadedb.utility.AnsiLogFormatter

6 java.util.logging.FileHandler.level = INFO

7 java.util.logging.FileHandler.pattern=./log/arcadedb.log

8 java.util.logging.FileHandler.formatter = com.arcadedb.log.LogFormatter

9 java.util.logging.FileHandler.limit=100000000

10 java.util.logging.FileHandler.count=10Where:

-

Line 1 contains 2 loggers, the console and the file. This means logs will be written in both console (process output) and configured file (see line 7)

-

Line 2 sets INFO (information) as the default logging level for all the Java classes between

FINER,FINE,INFO,WARNING,SEVERE -

Line 3 is as (line 2) but sets the level for ArcadeDB package only

SEVERE -

Line 4 sets the minimum level the console logger filters the log file (below

INFOlevel will be discarded) -

Line 5 sets the formatter used for the console. The

AnsiLogFormattersupports ANSI color codes -

Line 6 sets the minimum level the file logger filters the log file (below

INFOlevel will be discarded) -

Line 7 sets the path where to write the log file (the file will have a counter suffix, see line 10)

-

Line 8 sets the formatter used for the file

-

Line 9 sets the maximum file size for the log, before creating a new file. By default it is 100MB

-

Line 10 sets the number of files to keep in the directory. By default it is 10. This means that after the 10th file, the oldest file will be removed

If you’re running ArcadeDB in embedded mode, make sure you’re using the logging setting by specifying the arcadedb-log.properties file at JVM startup:

$ java ... -Djava.util.logging.config.file=$ARCADEDB_HOME/config/arcadedb-log.properties ...You can also use your own configuration for logging. In this case replace the path above with your own file.

4.1.5. Server Plugins (Extend The Server)

You can extend ArcadeDB server by creating custom plugins. A plugin is a Java class that implements the interface com.arcadedb.server.ServerPlugin:

public interface ServerPlugin {

void startService();

default void stopService() {

}

default void configure(ArcadeDBServer arcadeDBServer, ContextConfiguration configuration) {

}

default void registerAPI(final HttpServer httpServer, final PathHandler routes) {

}

}Once registered, the plugin (see below), ArcadeDB Server will instantiate your plugin class and will call the method configure() passing the server configuration. At startup of the server, the startService() method will be invoked. When the server is shut down, the stopService() will be invoked where you can free any resources used by the plugin. The method registerAPI(), if implemented, will be invoked when the HTTP server is initializing where one’s own HTTP commands can be registered. For more information about how to create custom HTTP commands, look at Custom HTTP commands.

Example:

package com.yourpackage;

public class MyPlugin implements ServerPlugin {

@Override

public void startService() {

System.out.println( "Plugin started" );

}

@Override

public void stopService() {

System.out.println( "Plugin halted" );

}

@Override

default void configure(ArcadeDBServer arcadeDBServer, ContextConfiguration configuration) {

System.out.println( "Plugin configured" );

}

@Override

default void registerAPI(final HttpServer httpServer, final PathHandler routes) {

System.out.println( "Registering HTTP commands" );

}

}To register your plugin, register the name and add your class (with full package name) in

arcadedb.server.plugins setting:

Example:

$ java ... -Darcadedb.server.plugins=MyPlugin:com.yourpackage.MyPlugin ...In case of multiple plugins, use a comma (,) to separate them.

4.1.6. Metrics

The ArcadeDB server can collect, log and publish metrics. To activate the collection of metrics use the setting:

$ ... -Darcadedb.serverMetrics=trueTo log the metrics to the standard output use the setting:

$ ... -Darcadedb.serverMetrics.logging=trueTo publish the metrics as Prometheus via HTTP, add the plugin:

$ ... -Darcadedb.server.plugins="Prometheus:com.arcadedb.metrics.prometheus.PrometheusMetricsPlugin"Then, under http://localhost:2480/prometheus (or the respective ArcadeDB host) the metrics can be requested given server credentials.

For details about the response format see the Prometheus docs.

4.2. Changing Settings

To change the default value of a setting, always put arcadedb. as a prefix. Example:

$ java -Darcadedb.dumpConfigAtStartup=true ...To change the same setting via Java code:

GlobalConfiguration.findByKey("arcadedb.dumpConfigAtStartup").setValue(true);Check the Appendix for all the available settings.

4.2.1. Environment Variables

The server script parses a set of environment variables which are summarized below:

|

JVM location |

|---|---|

|

JVM options |

|

ArcadeDB location |

|

|

|

JVM memory options |

For default values see the server.sh and server.bat scripts.

4.2.2. RAM Configuration

The ArcadeDB server, by default, uses a dynamic allocation for the used RAM. Sometimes you want to limit this to a specific amount. You can define the environment variable ARCADEDB_OPTS_MEMORY to tune the JVM settings for the usage of the RAM.

Example to use 800M fixed RAM for ArcadeDB server:

$ export ARCADEDB_OPTS_MEMORY="-Xms800M -Xmx800M"

$ bin/server.shArcadeDB can run with as little as 16M for RAM. In case you’re running ArcadeDB with less than 800M of RAM, you should set the "low-ram" as profile:

$ export ARCADEDB_OPTS_MEMORY="-Xms128M -Xmx128M"

$ bin/server.sh -Darcadedb.profile=low-ramSetting a profile is like executing a macro that changes multiple settings at once. You can tune them individually, check Settings.

In case of memory latency problems under Linux systems, the following JVM setting can improve performance:

$ export ARCADEDB_OPTS_MEMORY="-XX:+PerfDisableSharedMem"for more information, see https://www.evanjones.ca/jvm-mmap-pause.html

The Java heap memory is by default configured for desktop use; for custom containers, memory configuration can be adapted by:

$ export ARCADEDB_OPTS_MEMORY="-XX:InitialRAMPercentage=50.0 -XX:MaxRAMPercentage=75.0"More information about Java memory configuration see this article.

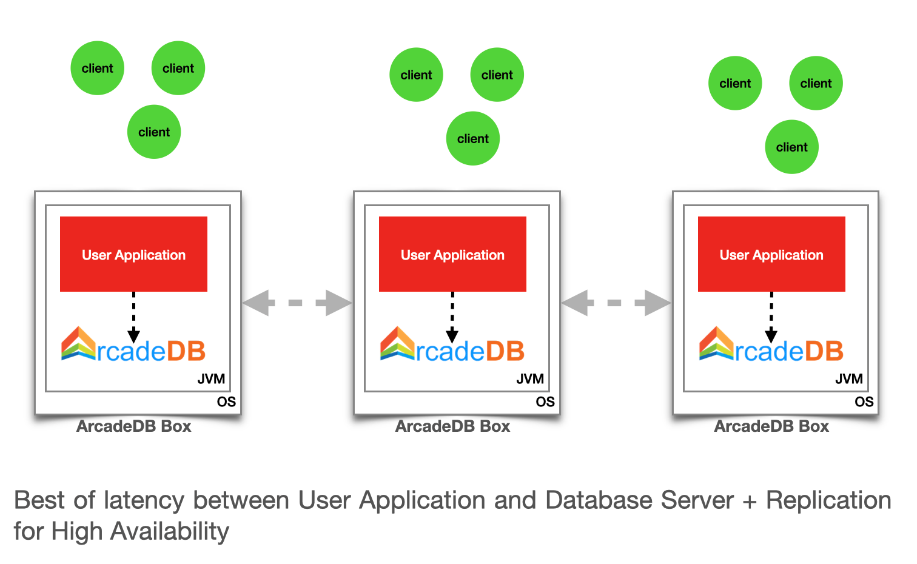

4.3. High Availability

ArcadeDB supports a High Availability mode where multiple servers share the same database via replication.

| All servers of a cluster serve the same databases. |

In order to start an ArcadeDB server with the support for replication, you need to:

-

Enable the HA module by setting the configuration

arcadedb.ha.enabledtotrue -

Define the servers that are part of the clusters (if you are using Kubernetes, look at Kubernetes paragraph). To setup the server list, define the

arcadedb.ha.serverListsetting by separating the servers with commas (,) and using the following format for servers:<hostname/ip-address[:port]>. The default port is2424if not specified. -

Define the local server name by setting the

arcadedb.server.nameconfiguration. Each node must have a unique name. If not specified, the default server name isArcadeDB_0.

Example:

$ bin/server.sh -Darcadedb.ha.enabled=true

-Darcadedb.server.name=FloridaServer

-Darcadedb.ha.serverList=192.168.0.2,192.168.0.1:2424,japan-server:8888The log should look like this:

[HttpServer] <FloridaServer> - HTTP Server started (host=0.0.0.0 port=2480)

[LeaderNetworkListener] <ArcadeDB_0> Listening for replication connections on 127.0.0.1:2424 (protocol v.-1)

[HAServer] <FloridaServer> Unable to find any Leader, start election (cluster=arcadedb configuredServers=1 majorityOfVotes=1)

[HAServer] <FloridaServer> Change election status from DONE to VOTING_FOR_ME

[HAServer] <FloridaServer> Starting election of local server asking for votes from [] (turn=1 retry=0 lastReplicationMessage=-1 configuredServers=1 majorityOfVotes=1)

[HAServer] <FloridaServer> Current server elected as new Leader (turn=1 totalVotes=1 majority=1)

[HAServer] <FloridaServer> Change election status from VOTING_FOR_ME to LEADER_WAITING_FOR_QUORUM

[HAServer] <FloridaServer> Contacting all the servers for the new leadership (turn=1)...At startup, the ArcadeDB server will look for an existent cluster to join based on the configured list of servers, otherwise a new cluster will be created.

In this example we set the server name to FloridaServer.

Every time a server joins a cluster, it starts the process to elect the new leader. If the cluster exists and already contains a Leader, then the existent leader is kept. Every time a server leaves the cluster (because it becomes unreachable), the election process is started again. To know more about the RAFT election process, look at RAFT protocol.

The cluster name by default is "arcadedb", but you can have multiple clusters in the same network.

To specify a custom name, set the configuration arcadedb.ha.clusterName=<name>.

Example: bin/server.sh -Darcadedb.ha.clusterName=projectB

|

4.3.1. Architecture

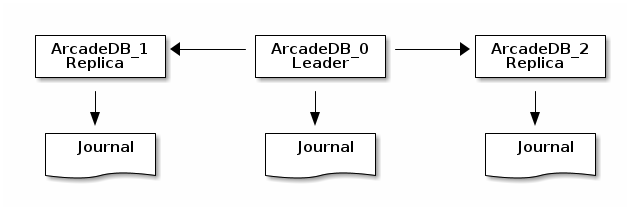

ArcadeDB has a Leader/Replica model by using RAFT consensus for election and replication.

Each server has its own journal. The journal is used in case of recovery of the cluster to get the most updated replica and to align the other nodes. All the write operations must be coordinated by the leader node.

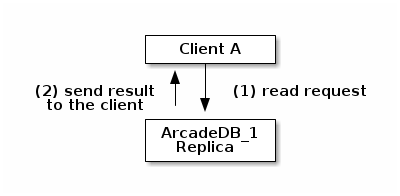

Any read operation, like a query, can be executed by any server in the cluster, while write operations can be executed only by the leader server.

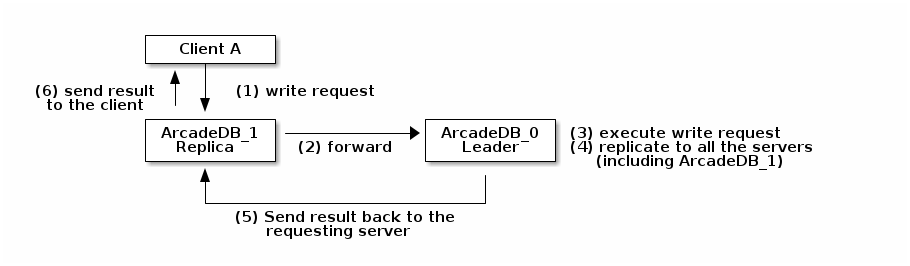

ArcadeDB doesn’t mandate the clients to be connected directly to the leader to execute write operations, but will use the replica server to forward the write request to the leader server. Everything is transparent for the end user where both leader and replica servers can read and write, but internally only the read requests are executed on the connected server. All the write requests will be transparently forwarded to the leader.

Look at the picture below where the client Client A is connected to the replica server ArcadeDB_1 where it executes a write request.

4.3.2. Auto fail-over

ArcadeDB cluster uses a quorum to assure the integrity of the database is maintained across all the servers forming the cluster.

The quorum is set by default to MAJORITY, that means the majority of the servers in the cluster must return the same result to be considered accepted and propagated to all the servers.

The quorum is MAJORITY by default.

You can specify a different quorum by setting the number of servers or none to have no quorum and all to wait the response from all the servers.

Set the configuration arcadedb.ha.quorum=<quorum>.

Example: bin/server.sh -Darcadedb.ha.quorum=all

|

If the configured quorum is not met, the transaction is rollback on all the servers, the database returns to the previous state and a transaction error is thrown to the client.

ArcadeDB manages the fail-over automatically in most of the cases.

Server unreachable

A server can become unreachable for many reasons:

-

The ArcadeDB server process has been terminated

-

The physical or virtual server hosting the ArcadeDB server process has been shut off or is rebooting

-

The physical or virtual server hosting the ArcadeDB server process has network issues and can’t reach one or more of the other servers

-

Network issues that prevent the ArcadeDB server to communicate with the rest of the servers in the cluster

4.3.4. Troubleshooting

Performance: insertion is slow

ArcadeDB uses an optimistic lock approach: if two threads try to update the same page, the first thread wins, the second thread throws a ConcurrentModificationException and forces the client to retry the transaction or fail after a certain number of retries (configurable).

Often this fail/retry mechanism is totally hidden to the developer that executes a transaction via HTTP or via the Java API:

db.transaction( ()-> {

// MY TRANSACTION CODE

});If you are inserting a lot of record in parallel (by using the server, or just via API multi-thread), you could benefit by allocating the bucket per thread. Example to change the bucket selection strategy for the vertex type "User" via SQL:

ALTER TYPE User BucketSelectionStrategy `thread`With the command above, in insertion ArcadeDB will select the physical bucket based on the thread the request is coming from. If you have enough buckets (created by default when you create a new type, but you can manually adjust it) insertions can go truly in parallel with zero contentions in pages, meaning zero exception and retries.

4.3.5. HA Settings

The following settings are used by the High Availability module:

| Setting | Description | Default Value |

|---|---|---|

|

Cluster name. Useful in case of multiple clusters in the same network |

arcadedb |

|

Servers in the cluster as a list of <hostname/ip-address:port> items separated by comma. Example: 192.168.0.1:2424,192.168.0.2:2424. If not specified, auto-discovery is enabled |

NOT DEFINED (auto discovery is enabled by default) |

|

Enforces a role in a cluster, either "any" or "replica" |

"any" |

|

Default quorum between 'none', 1, 2, 3, 'majority' and 'all' servers |

MAJORITY |

|

Timeout waiting for the quorum |

10000 |

|

The server is running inside Kubernetes |

false |

|

When running inside Kubernetes use this suffix to reach the other servers.

Example: |

|

|

Queue size for replicating messages between servers |

512 |

|

Maximum file size for replicating messages between servers |

1GB |

|

Maximum channel chunk size for replicating messages between servers |

16777216 |

|

TCP/IP host name used for incoming replication connections |

localhost |

|

TCP/IP port number (range) used for incoming replication connections |

2424-2433 |

4.4. Embedded Server

Embedding the server in your JVM allows to have all the benefits of working in embedded mode with ArcadeDB (zero cost for network transport and marshalling) and still having the database accessible from the outside, such as Studio, remote API, Postgres, REDIS and MongoDB drivers.

We call this configuration an "ArcadeDB Box".

First, add the server library in your classpath.

If you’re using Maven include this dependency in your pom.xml file.

<dependency>

<groupId>com.arcadedb</groupId>

<artifactId>arcadedb-server</artifactId>

<version>25.4.1</version>

</dependency>This library depends on arcadedb-network-<version>.jar.

If you’re using Maven or Gradle, it will be imported automatically as a dependency, otherwise please add also the arcadedb-network library to your classpath.

The arcadedb-server dependency will only start the ArcadeDB server. You will see the HTTP URL for the server along with the port number displayed, for example, http://localhost:2480. However, if you try to access this URL to see the ArcadeDB studio, you will receive a "Not Found" message. This is because the arcadedb-server dependency only adds the embedded server.

If you need to access the ArcadeDB studio to execute graph database queries, then you will need to add the following dependency:

|

<dependency>

<groupId>com.arcadedb</groupId>

<artifactId>arcadedb-studio</artifactId>

<version>25.4.1</version>

</dependency>4.4.1. Start the server in the JVM

To start a server as embedded, create it with an empty configuration, so all the setting will be the default ones:

ContextConfiguration config = new ContextConfiguration();

ArcadeDBServer server = new ArcadeDBServer(config);

server.start();To start a server in distributed configuration (with replicas), you can set your settings in the ContextConfiguration:

config.setValue(GlobalConfiguration.HA_SERVER_LIST, "192.168.10.1,192.168.10.2,192.168.10.3");

config.setValue(GlobalConfiguration.HA_REPLICATION_INCOMING_HOST, "0.0.0.0");

config.setValue(GlobalConfiguration.HA_ENABLED, true);When you embed the server, you should always get the database instance from the server itself.

This assures the database instance is just one in the entire JVM.

If you try to create or open another database instance from the DatabaseFactory, you will receive an error that the underlying database is locked by another process.

Database database = server.getDatabase(<URL>);Or this if you want to create a new database if not exists:

Database database = server.getOrCreateDatabase(<URL>);4.4.2. Create custom HTTP commands

You can easily add custom HTTP commands on ArcadeDB’s Undertow HTTP Server by creating a Server Plugin (look at MongoDBProtocolPlugin plugin implementation for a real example) and implementing the registerAPI method.

Example for the HTTP POST API /myapi/test:

package com.yourpackage;

public class MyTest implements ServerPlugin {

// ...

@Override

public void registerAPI(HttpServer httpServer, final PathHandler routes) {

routes.addPrefixPath("/myapi",//

Handlers.routing()//

.get("/account/{id}", new RetieveeAccount(this))// YOU CAN ADD YOUR HANDLERS UNDER THE SAME PREFIX PATH

.post("/test/{name}", new MyTestAPI(this))//

);

}

}You can use GET, POST or any HTTP methods when you register your handler.

Note that multiple handlers are defined under the same prefix /myapi.

Below you can find the implementation of a "Test" handler that can be called by using the HTTP POST method against the URL /myapi/test/{name} where {name} is the name passed as an argument.

Note that the MyTestAPI class is inheriting DatabaseAbstractHandler to have the database instance as a parameter.

If the user is not authenticated, the execute() method is not called at all, but an authentication error is returned. If you don’t need to access to the database, then you can extend the AbstractHandler class instead.

public class MyTestAPI extends DatabaseAbstractHandler {

public MyTestAPI(final HttpServer httpServer) {

super(httpServer);

}

@Override

public void execute(final HttpServerExchange exchange, ServerSecurityUser user, final Database database) throws IOException {

final Deque<String> namePar = exchange.getQueryParameters().get("name");

if (namePar == null || namePar.isEmpty()) {

exchange.setStatusCode(400);

exchange.getResponseSender().send("{ \"error\" : \"name is null\"}");

return;

}

final String name = namePar.getFirst();

// DO SOMETHING MEANINGFUL HERE

// ...

exchange.setStatusCode(204);

exchange.getResponseSender().send("");

}

}At startup, ArcadeDB server will initiate your plugin and register your API.

To start the server with your plugin, register the full class in

arcadedb.server.plugins setting:

Example:

$ java ... -Darcadedb.server.plugins=MyPlugin:com.yourpackage.MyPlugin ...4.4.3. HTTPS connection

In order to enable HTTPS on ArcadeDB server, you have to set the following configuration before the server starts:

configuration.setValue(GlobalConfiguration.NETWORK_USE_SSL, true);

configuration.setValue(GlobalConfiguration.NETWORK_SSL_KEYSTORE, "src/test/resources/master.jks");

configuration.setValue(GlobalConfiguration.NETWORK_SSL_KEYSTORE_PASSWORD, "keypassword");

configuration.setValue(GlobalConfiguration.NETWORK_SSL_TRUSTSTORE, "src/test/resources/master.jks");

configuration.setValue(GlobalConfiguration.NETWORK_SSL_TRUSTSTORE_PASSWORD, "storepassword");Where:

-

NETWORK_USE_SSLenable the SSL support for the HTTP Server -

NETWORK_SSL_KEYSTOREis the path where is located the keystore file -

NETWORK_SSL_KEYSTORE_PASSWORDis the keystore password -

NETWORK_SSL_TRUSTSTOREis the path where is located the truststore file -

NETWORK_SSL_TRUSTSTORE_PASSWORDis the truststore password

Note that the default port for HTTPs is configured via the global setting:

GlobalConfiguration.SERVER_HTTPS_INCOMING_PORTAnd by default starts from 2490 to 2499 (increases the port if it’s already occupied).

| if HTTP or HTTPS port are already used, the next ports are used. With the default range of 2480-2489 for HTTP and 2490-2499 for HTTPS, if the port 2480 is not available, then the next port for both HTTP and HTTPS will be used, namely 2481 for HTTP and 2491 for HTTPS |

4.5. Docker

To run the ArcadeDB server with Docker, type this (replace <password> with the root password you want to use):

$ docker run --rm -p 2480:2480 -p 2424:2424 --name my_arcadedb \

--env JAVA_OPTS="-Darcadedb.server.rootPassword=playwithdata" \

--hostname my_arcadedb arcadedata/arcadedb:latestIf there are no errors, Docker prints immediately the container id. You can use that id to stop the container, or execute some commands from it.

To run the console from the container started above, use:

$ docker exec -it my_arcadedb bin/console.sh

ArcadeDB Console v.25.4.1 - Copyrights (c) 2021 Arcade Data (https://arcadedb.com)

>

The ArcadeDB image can also be used with Podman, just replace docker with podman in the examples.

|

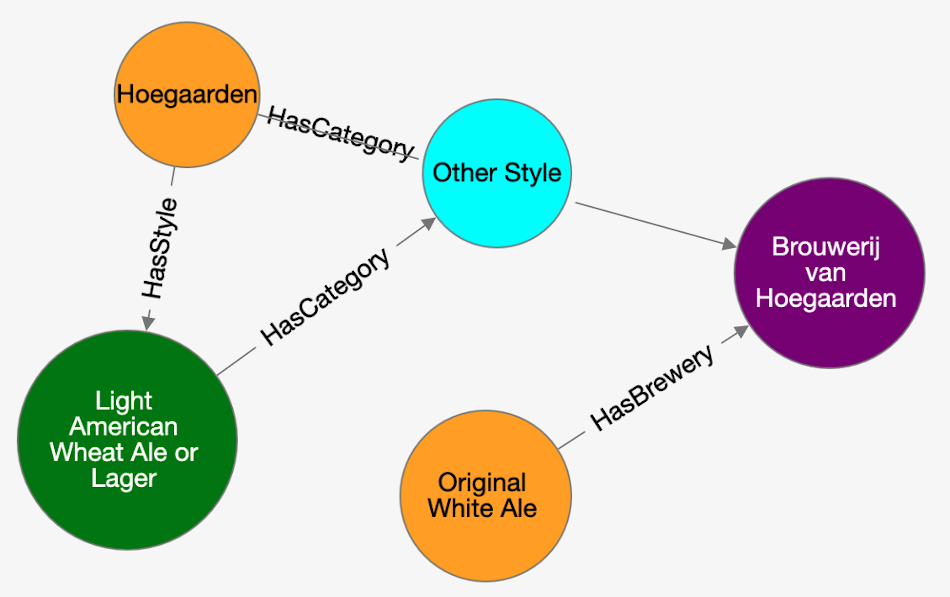

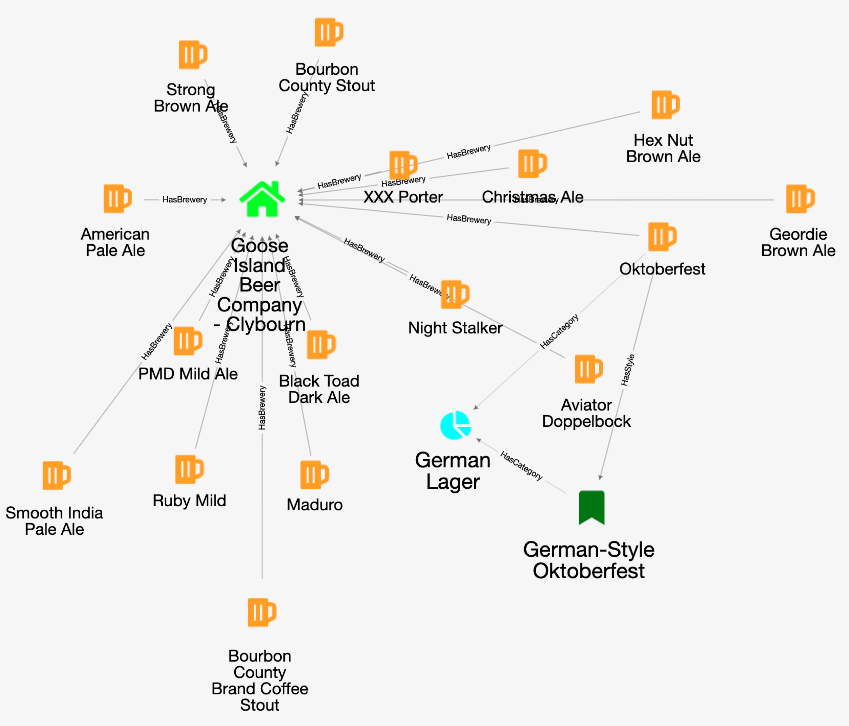

Quick start with the OpenBeer database

You can run ArcadeDB server with a demo database in less than 1 minute. Run ArcadeDB server with docker specifying the database to import as a parameter in the docker command.

Example of running ArcadeDB Server with all the plugins enabled (Redis, Postgres, Mongo, Gremlin) that download and install OrientDB’s OpenBeer dataset:

$ docker run --rm -p 2480:2480 -p 2424:2424 -p 6379:6379 -p 5432:5432 -p 8182:8182 --env JAVA_OPTS="\

-Darcadedb.server.rootPassword=playwithdata \

-Darcadedb.server.defaultDatabases=Imported[root]{import:https://github.com/ArcadeData/arcadedb-datasets/raw/main/orientdb/OpenBeer.gz} \

-Darcadedb.server.plugins=Redis:com.arcadedb.redis.RedisProtocolPlugin, \

MongoDB:com.arcadedb.mongo.MongoDBProtocolPlugin, \

Postgres:com.arcadedb.postgres.PostgresProtocolPlugin, \

GremlinServer:com.arcadedb.server.gremlin.GremlinServerPlugin" \

arcadedata/arcadedb:latestNow point your browser on http://localhost:2480 and you’ll see ArcadeDB Studio. Now enter "root" as a user and "playwithdata" as a password.

| User and password are specified in the docker command above. |

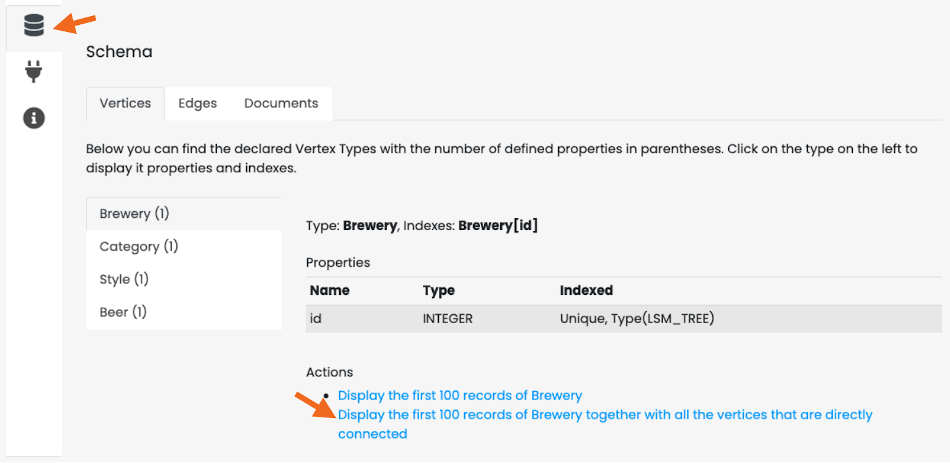

Now click on the "Database" icon on the toolbar on the left. This is the database schema. Click on "OpenBeer" vertex type and then on the action "Display the first 100 records of Beer together with all the vertices that are directly connected".

You should see the first 100 beers in the database and all their connections.

Persistence

There are multiple options to make databases in an ArcadeDB container persistent, yet the practical choice is highly dependent on the application and environment.

On the Docker side there are two typical options to persist data in a container:

-

A Docker volume which is a Docker managed section of the host’s filesystem. This would be the preferred option if the host machine is to store the persistent data.

-

A Bind mount which is a user-managed folder of the host’s filesystem. This can be used if the target volume is itself mounted on the host like a network share.

See the Docker storage documentation on how to set up volumes or mounts.

On the ArcadeDB side there are basically two options to consider:

-

Store the database itself on an external volume. In this case all read and write operations from and to a database take place on the specified volume or mount, which can entail performance limitations. Use the setting

-Darcadedb.server.databaseDirectory=/mydatabasesto specify the mount-point of the volume or mount as absolute path to ArcadeDB. -

Store only backups on an external volume. In this approach all read and write operation from and to a database happen in the storage provided by Docker to the container. This is faster if the host can provide local storage. However, the user needs to take care of regular backups, see

BACKUP DATABASE, for which the default backup folder can be set by-Darcadedb.server.backupDirectory=/mybackupto specify the mount-point of the volume or mount as absolute path to ArcadeDB.

| Which combination is most suitable depends on the computational environment. For example, "local storage" can have different meanings on a bare metal machine, a virtual machine or container cluster. |

Tuning

In general, the RAM allocated for the JVM should be ≤80% of the container RAM. The default Dockerfile for ArcadeDB sets 2 GB of RAM for ArcadeDB (-Xms2G -Xmx2G), so you should allocate at least 2.3G to the Docker container running exclusively ArcadeDB.

To run ArcadeDB with 1G docker container, you could start ArcadeDB by using 800M for ArcadeDB’s server RAM by setting ARCADEDB_OPTS_MEMORY variable with Docker:

$ docker ... -e ARCADEDB_OPTS_MEMORY="-Xms800M -Xmx800M" ...To run ArcadeDB with RAM <800M, it’s suggested to tune some settings. You can use the low-ram profile to use the least memory possible.

$ docker ... -e ARCADEDB_OPTS_MEMORY="-Xms800M -Xmx800M" -e arcadedb.profile=low-ram ...4.6. Kubernetes

Before starting the Kubernetes (K8S) cluster, set ArcadeDB Server root password as a secret (replace <password> with the root password you want to use):

$ kubectl create secret generic arcadedb-credentials --from-literal=rootPassword='<password>'This will set the password in the rootPassword environment variable. The ArcadeDB server will use this password for the root user.

Now you can start a Kubernetes cluster with 3 servers by using the default configuration:

$ kubectl apply -f config/arcadedb-statefulset.yamlFor more information on ArcadeDB Kubernetes config please check.

In order to scale up or down with the number of replicas, use this:

$ kubectl scale statefulsets arcadedb-server --replicas=<new-number-of-replicas>Where the value of <new-number-of-replicas> is the new number of replicas. Example:

$ kubectl scale statefulsets arcadedb-server --replicas=3Scaling up and down doesn’t affect current workload. There are no pauses when a server enters/exits from the cluster.

4.6.1. Helm Charts

To facilitate the deployment of ArcadeDB on a Kubernetes cluster a Helm chart can be used.

To install the template chart with the release name my-arcadedb enter:

helm install my-arcadedb ./arcadedbThe command deploys ArcadeDB on the Kubernetes cluster using the default configuration.

To uninstall/delete the my-arcadedb deployment:

helm uninstall my-arcadedbThe command removes all the Kubernetes components associated with the chart and deletes the release. Details about the configurable parameters of the template chart, as well as persistence, ingress, and resource, see the dedicated README.

4.6.2. Troubleshooting

The most common issue with using K8S is with the setting of the root password for the server (see at the beginning of this section).

Following are some useful commands for troubleshooting issues with ArcadeDB and Kubernetes.

Display the status of a pod:

$ kubectl describe pod arcadedb-0Replace "arcadedb-0" with the server container you want to use.

To display the logs of the first server:

$ kubectl logs arcadedb-0Replace "arcadedb-0" with the server container you want to use.

To remove all the containers and restart the cluster again, execute these 2 commands:

$ kubectl delete all --all --namespace default

$ kubectl apply -f config/arcadedb-statefulset.yaml5. Studio

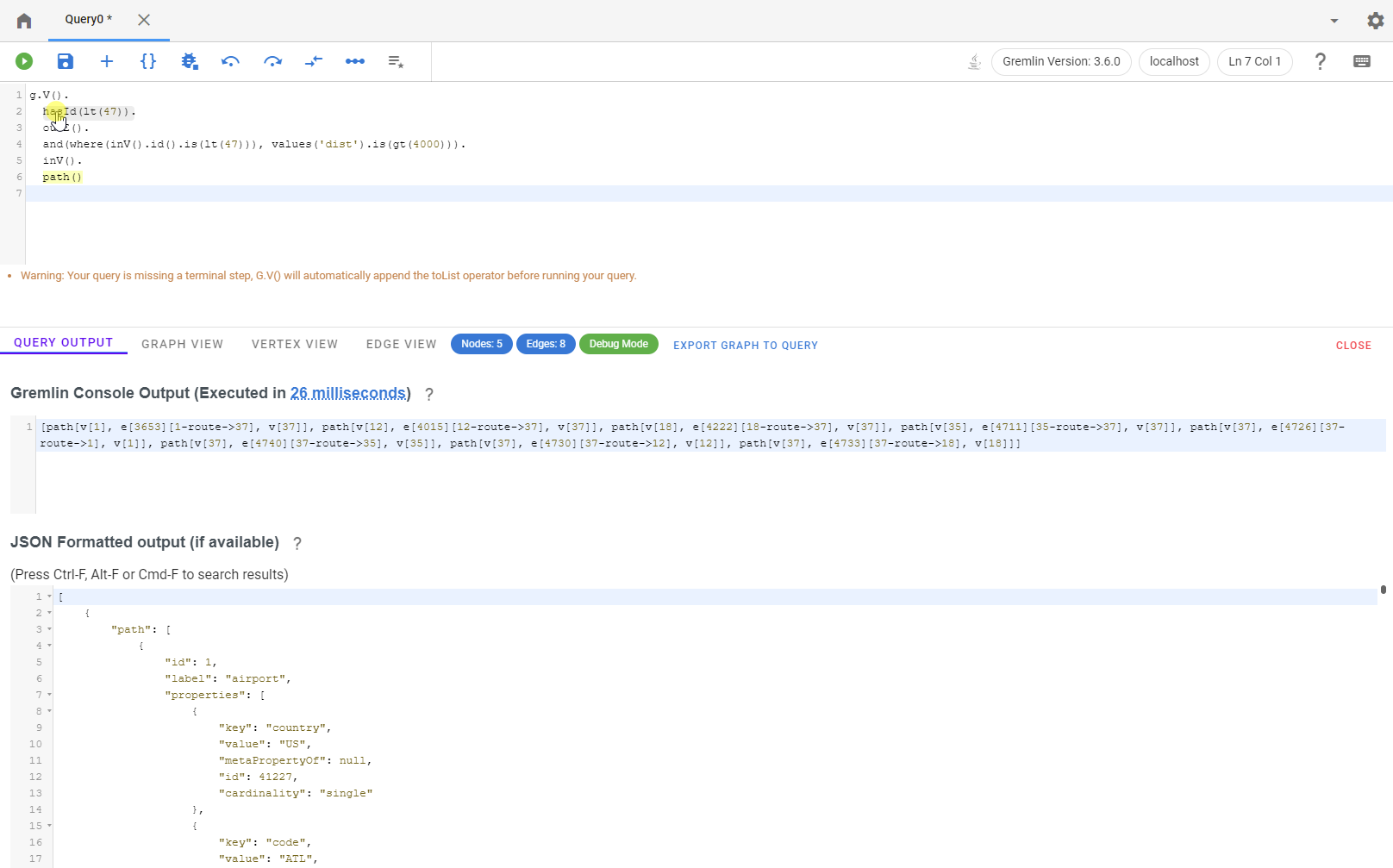

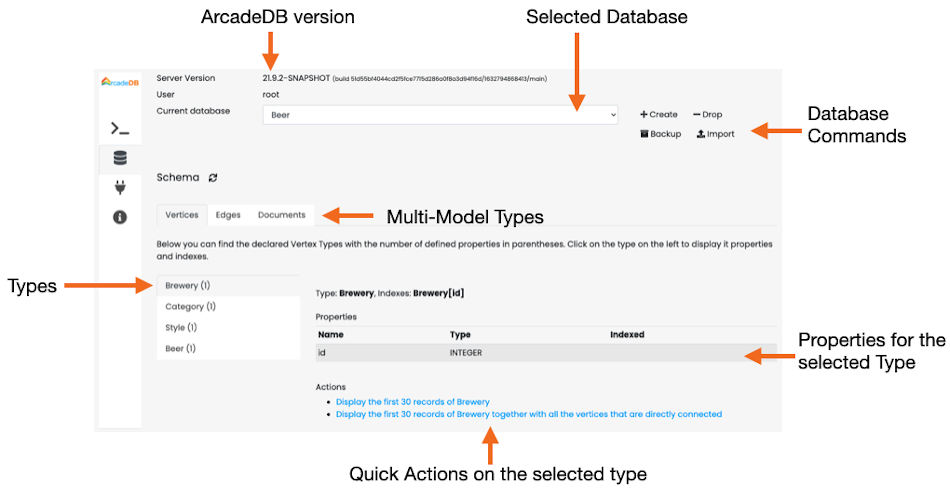

Studio is the web tool that comes bundled with ArcadeDB Server. It starts automatically on port 2480. If you have installed ArcadeDB, and it’s running on your local computer, then you can access Studio at http://localhost:2480. Replace "localhost" with the host name or IP address of the server where ArcadeDB server is running.

If you’re running ArcadeDB server as Embedded, please make sure the jar arcadedb-studio-<version>.jar is included in your classpath.

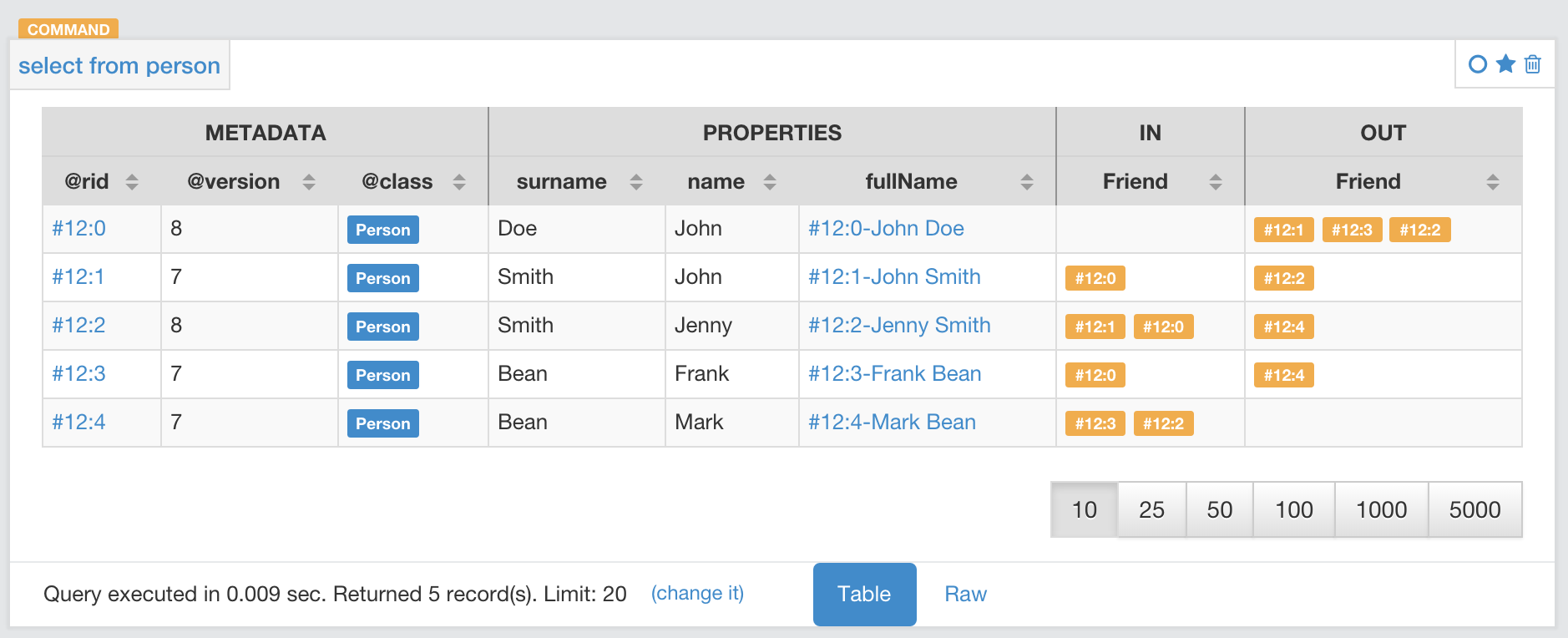

Command

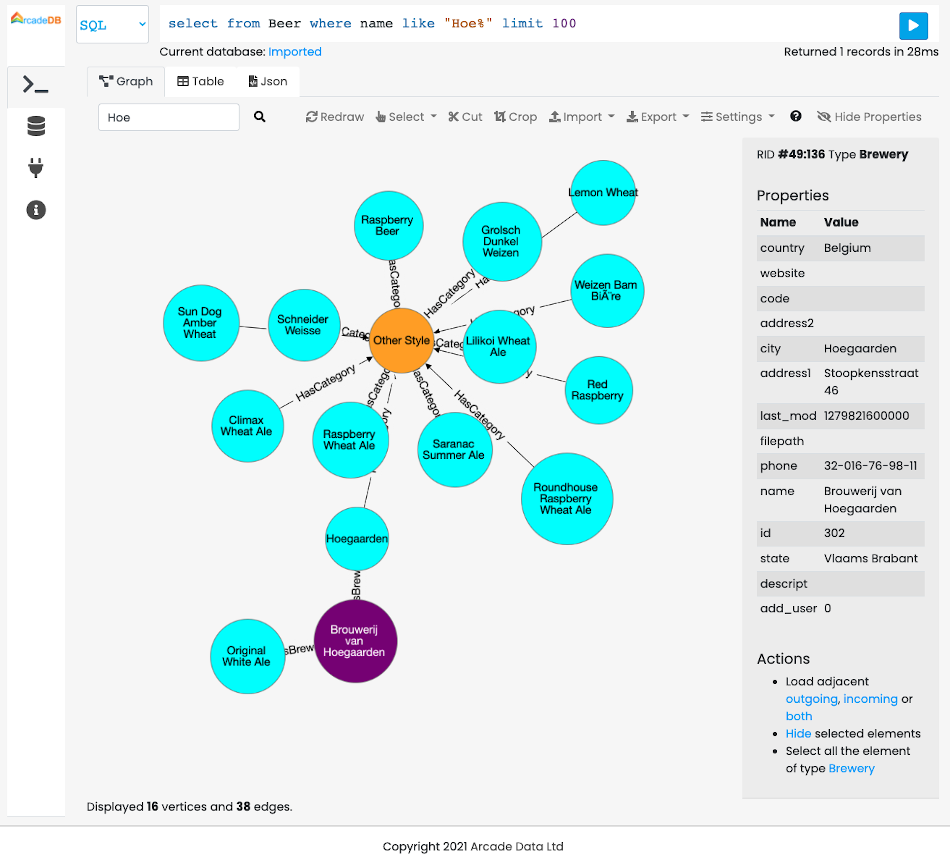

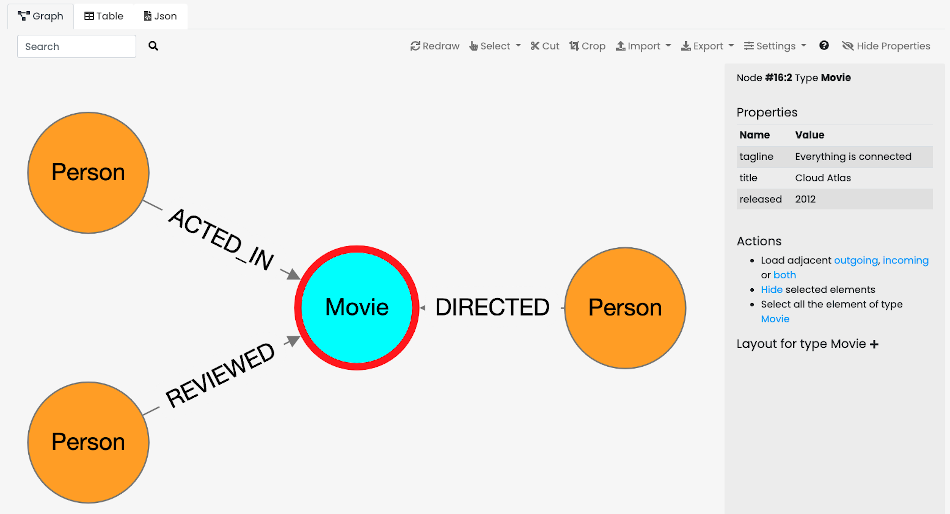

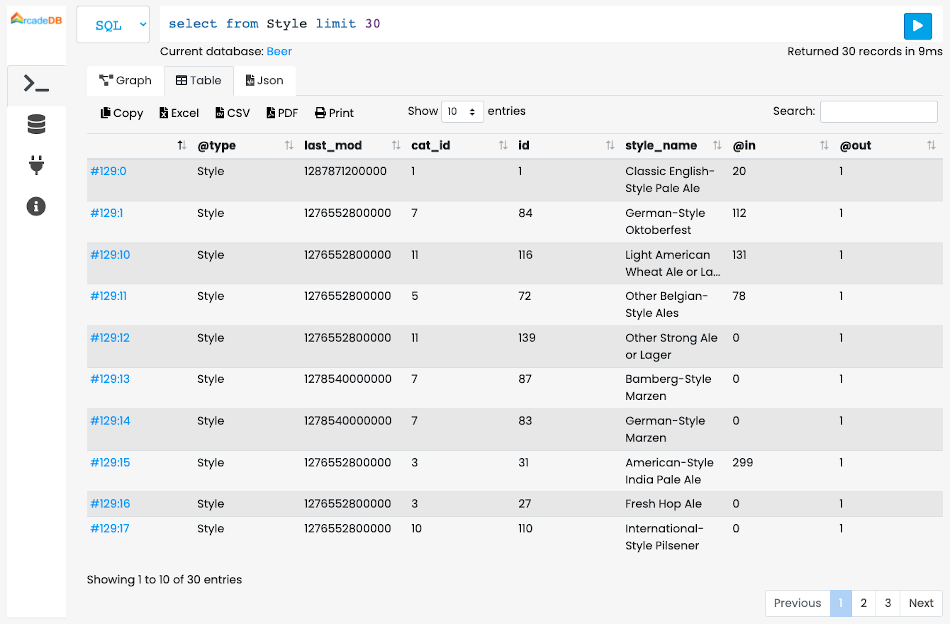

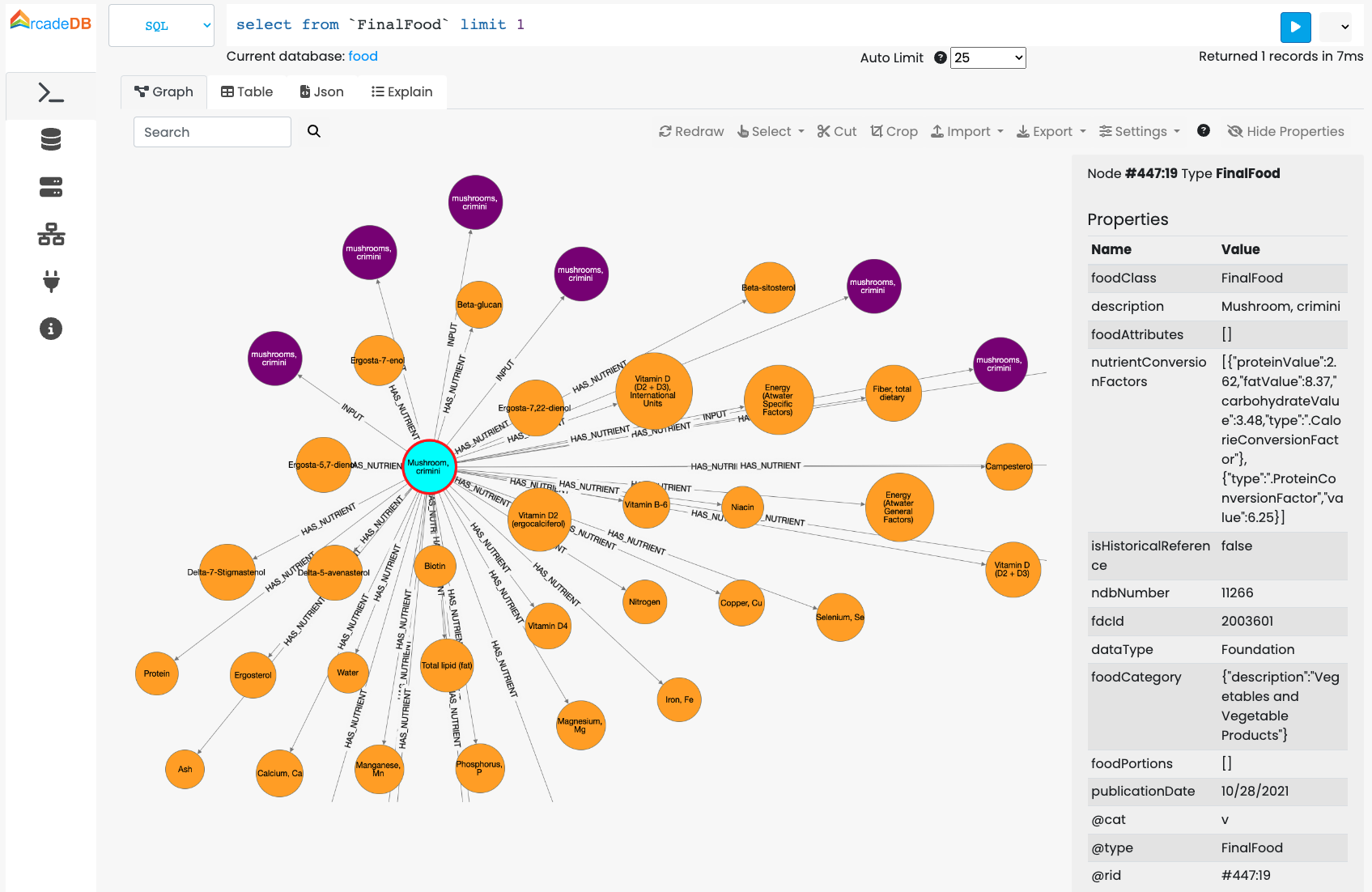

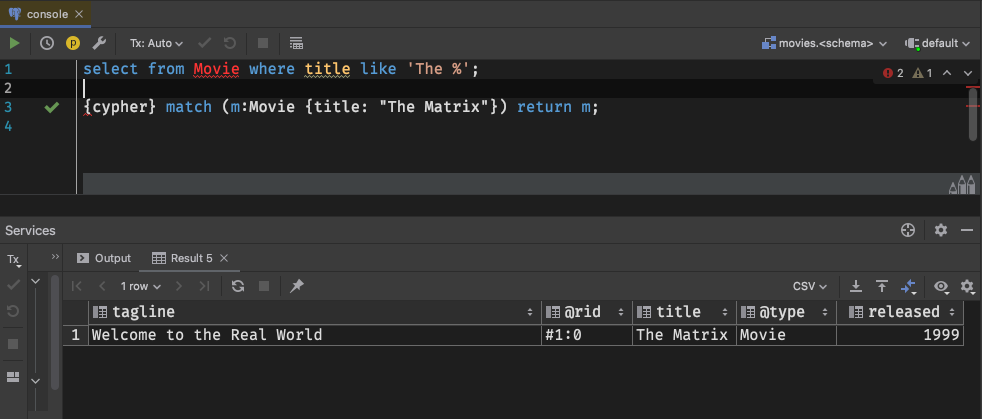

The first and most important panel in Studio is the Command panel. Below you can find a screenshot with the main components.

-

Main Menu is the vertical tab with the following options:

-

Command, the current panel to execute commands against the database

-

Database, containing the information about the selected database and its schema. From this panel you can switch to a different database

-

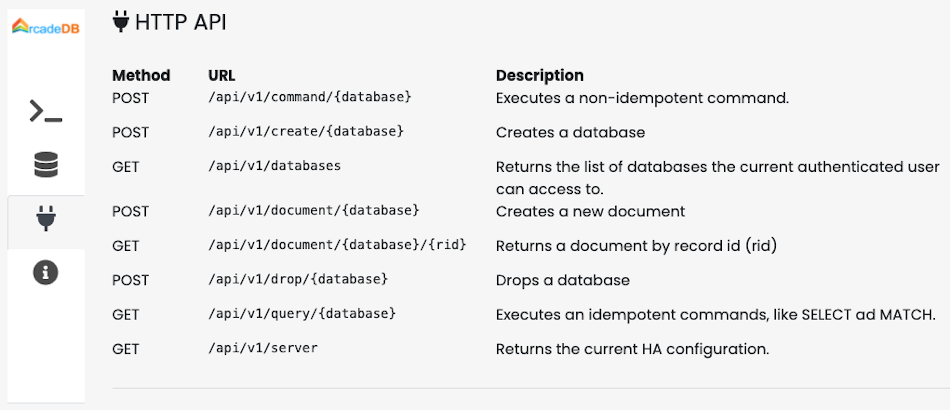

API, with the description of the public HTTP API exposed on the current server

-

Information, containing a quick reference to the online documentation

-

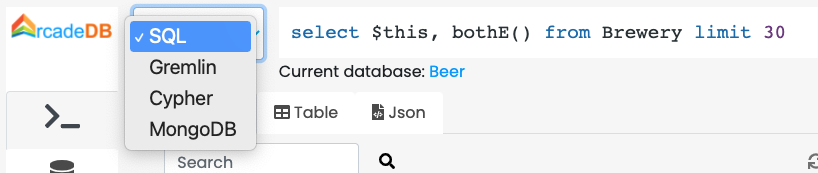

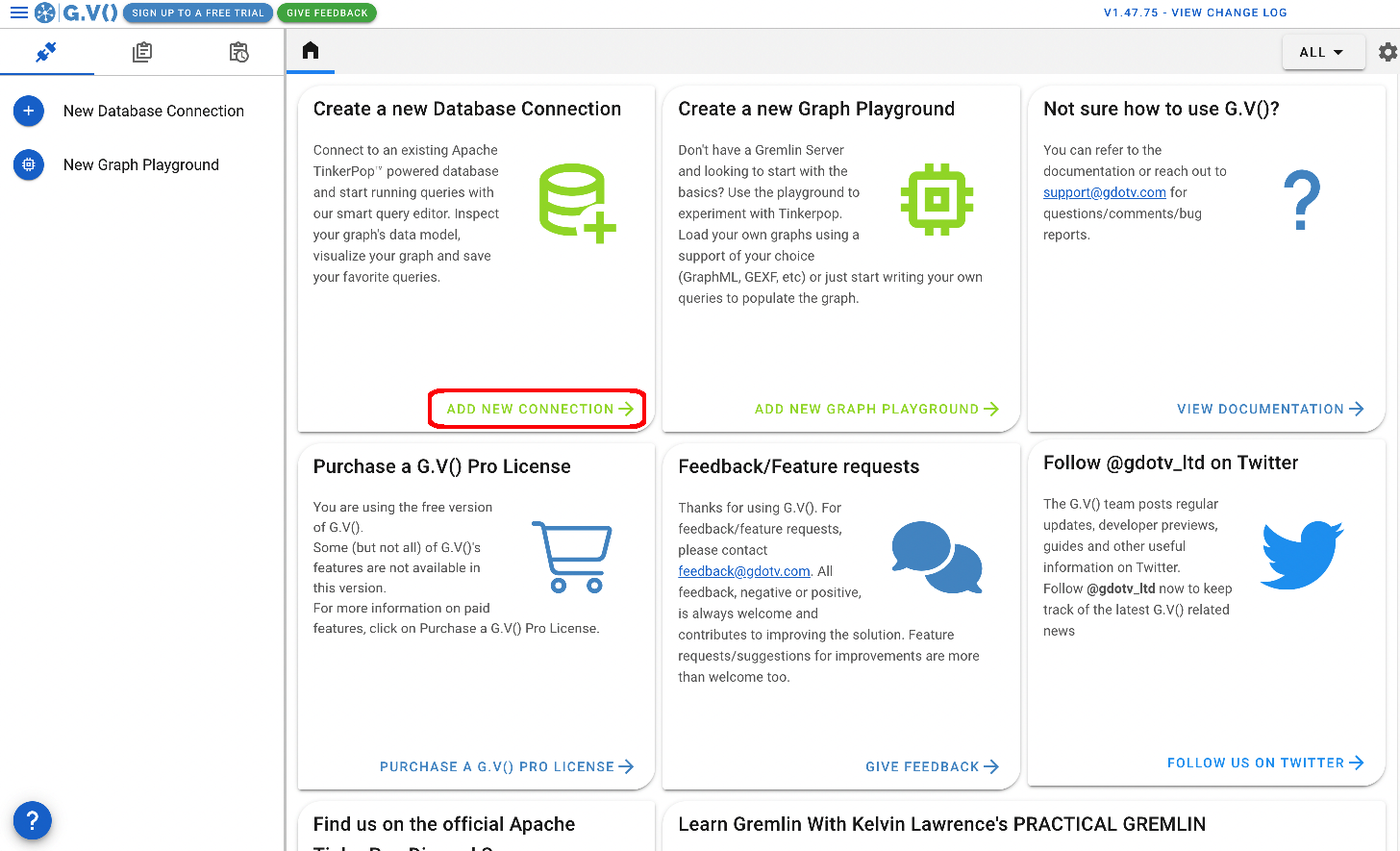

Execute a command/query

In order to execute a command (or query), select the language first. By default is SQL, but you can choose between:

-

SQL (for any models, including graphs and documents)

-

SQL Script (multiple commands/queries)

-

Apache Tinkerpop Gremlin (only for graphs)

-

Open Cypher (only for graphs)

-

MongoDB (only for documents)

-

GraphQL

Based on the selected language, the command text area will adjust the syntax highlighting to simplify the writing of the command.

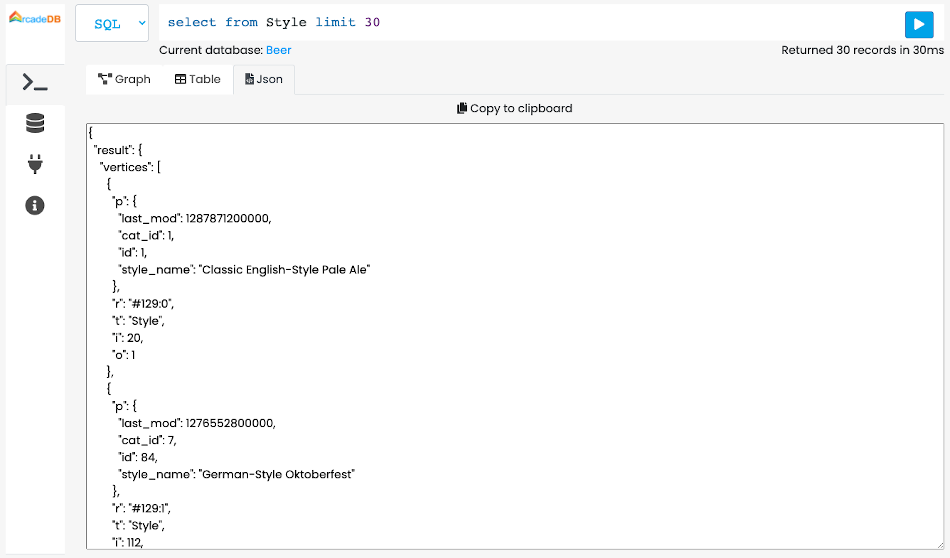

The result of the command will appear in the Command Result area as a Graph a Table or JSON Panel.

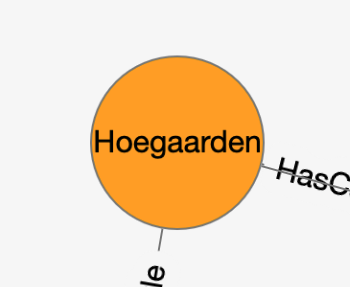

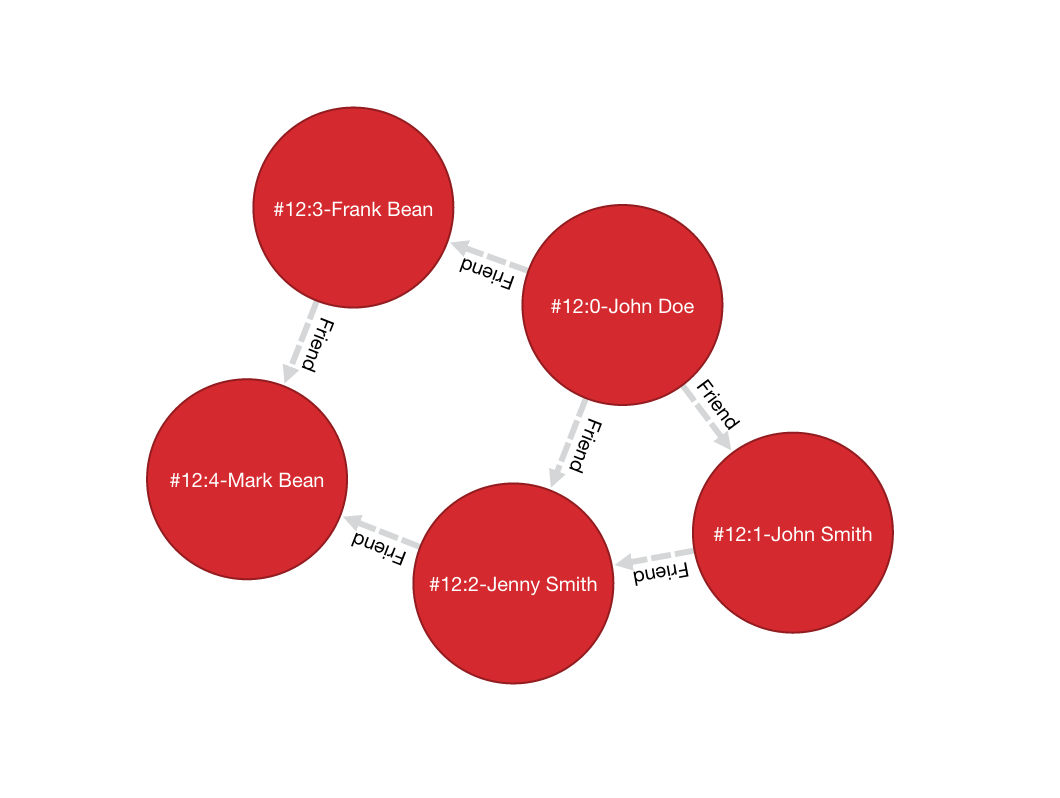

Graph Panel

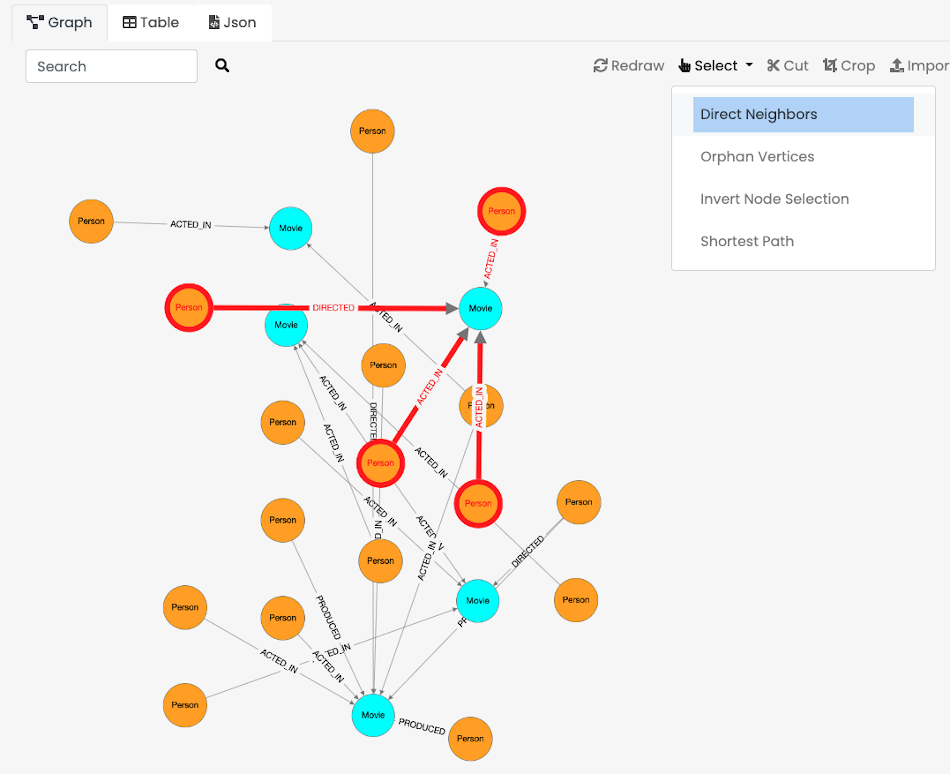

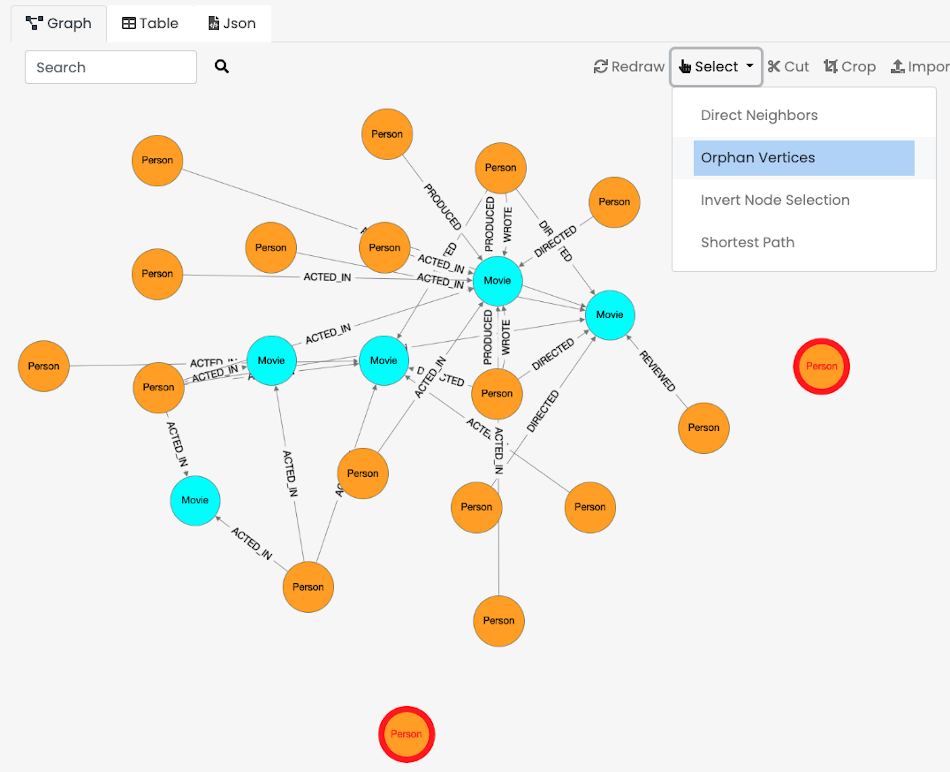

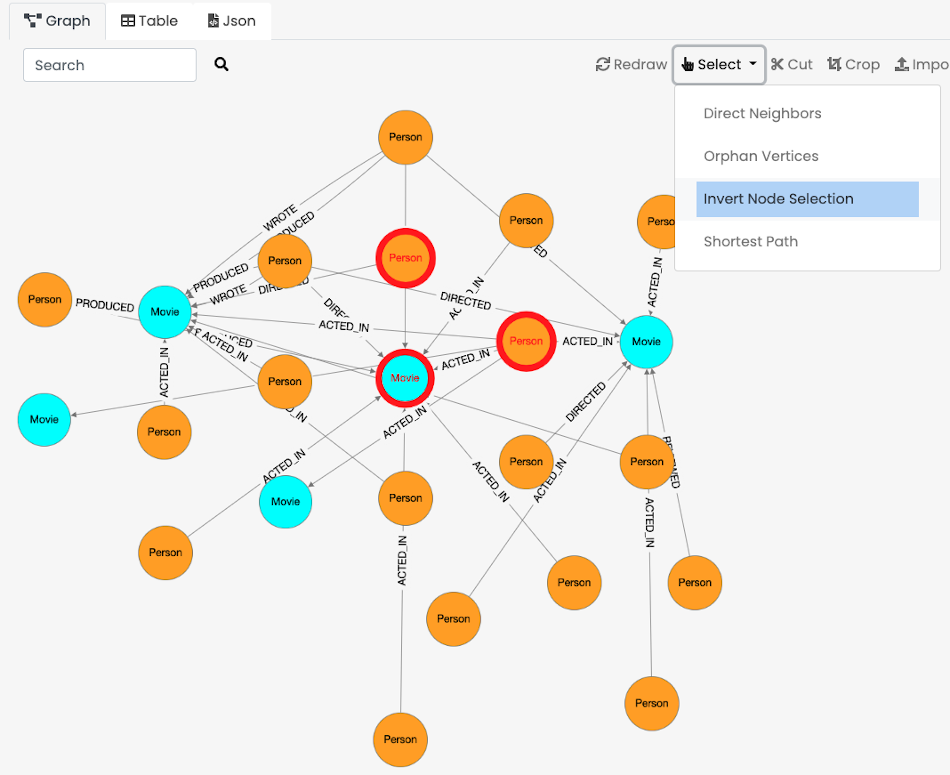

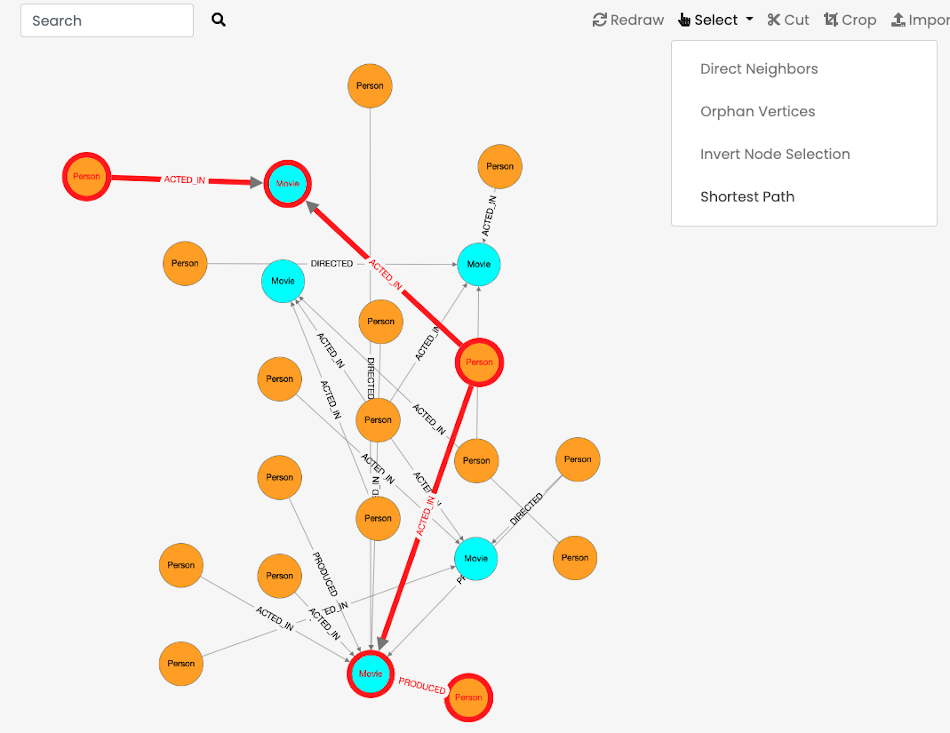

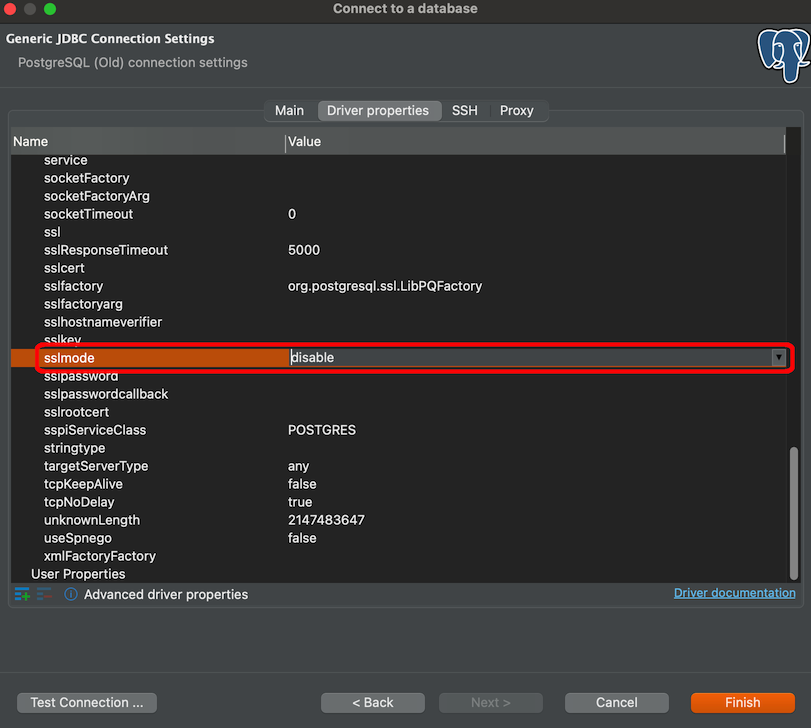

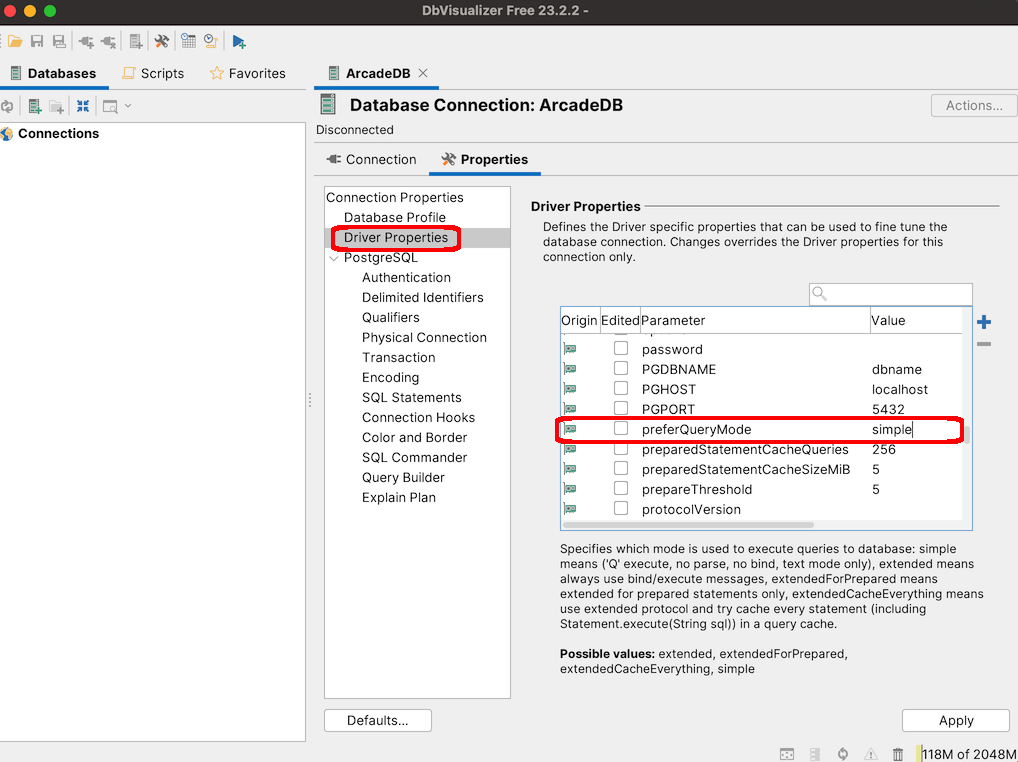

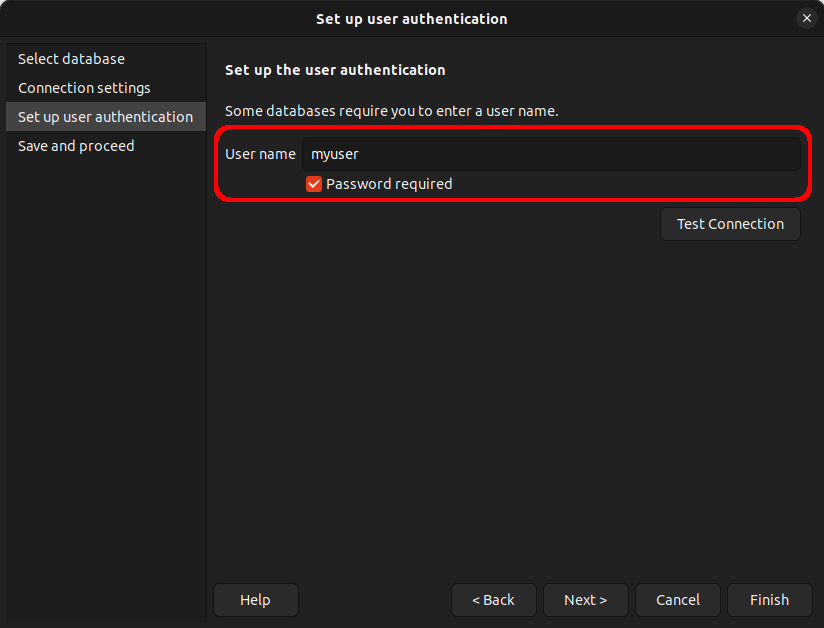

|